Loggly Q&A: Driving down the DevOps Road with Larry Dragich

Larry Dragich is Director of Customer Experience Management at The Auto Club Group (ACG) in Dearborn, MI. He’s been in an IT leadership role at ACG for the past 12 years, with a focus on IT performance monitoring. Dragich is a regular blogger on APMdigest and a contributing editor on Wikipedia.

Loggly: In one of your recent blogs, you wrote that APM stands ready to bridge the gap for DevOps, becoming more than just a monitoring platform. Can you elaborate on that evolution?

Dragich: This is about APM helping these two areas of development and operations work better together. It’s key that we view APM both as a management platform and a monitoring platform. My view is that APM is becoming a DevOps toolset and an approach. It’s not just tools, but how you approach enterprise monitoring as a whole, which includes the testing team, the development team, and the operations team.

Loggly: Can you give an example?

Dragich: At our organization, we’re using APM to partner with the testing teams before production. We take that data and those metrics and compare them to the production baseline of the same application, which helps us discern whether any issues remain after QA testing is done. It works as long as everyone trusts each other.
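The baseline comparison Dragich describes can be sketched in a few lines. This is a minimal illustration, not ACG's actual process: the transaction names, the sample response times, and the three-standard-deviation threshold are all assumptions chosen for the example.

```python
from statistics import mean, stdev

def flag_regressions(baseline, qa_run, threshold=3.0):
    """Flag transactions whose QA mean response time exceeds the
    production baseline mean by more than `threshold` standard deviations.

    Both arguments map a transaction name to a list of response times (ms).
    """
    flagged = {}
    for name, qa_samples in qa_run.items():
        prod_samples = baseline.get(name)
        # Skip transactions without enough data to form a baseline.
        if not qa_samples or not prod_samples or len(prod_samples) < 2:
            continue
        limit = mean(prod_samples) + threshold * stdev(prod_samples)
        qa_mean = mean(qa_samples)
        if qa_mean > limit:
            flagged[name] = (qa_mean, limit)
    return flagged

# Hypothetical sample data: response times in milliseconds.
baseline = {
    "login": [200, 210, 190, 205, 195],
    "quote": [800, 820, 790, 810, 780],
}
qa_run = {
    "login": [204, 198, 207],
    "quote": [1200, 1150, 1250],
}

for name, (qa_mean, limit) in flag_regressions(baseline, qa_run).items():
    print(f"{name}: QA mean {qa_mean:.0f} ms exceeds baseline limit {limit:.0f} ms")
```

With this sample data only the `quote` transaction is flagged, since its QA response times sit well above the production baseline while `login` stays within it.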

Loggly: How do log metrics and related services fit into this DevOps and APM synergy?

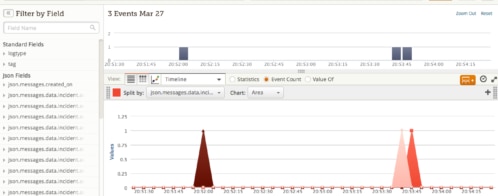

Dragich: We do log file monitoring for security aspects and when looking for certain errors. We tie log data together for basic alerting on application performance and then report that data back into our Ops Manager, which automatically creates an incident. We’re still at an infancy stage though, when you consider that log file monitoring has the potential to highlight application trends or real-time performance metrics. Log analysis dovetails nicely with the trends we discussed above because you can look at logs and marry them with performance metrics. There’s a lot of potential for gaining insight from log files now that the products have matured and deliver more visibility and ease of use.
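As a rough illustration of the flow described here (scan logs for errors, then raise an incident), the sketch below counts error lines per application and builds an incident record once a threshold is crossed. The log line format, the `build_incidents` helper, and the incident fields are hypothetical; a real setup would forward the record to whatever operations or ITSM tool is in use.

```python
import re
from collections import Counter

# Assumed log format: "<timestamp> <LEVEL> [<application>] <message>"
ERROR_PATTERN = re.compile(r"\b(ERROR|FATAL)\b\s+\[(?P<app>[\w-]+)\]")

def count_errors(lines):
    """Count ERROR/FATAL lines per application."""
    counts = Counter()
    for line in lines:
        match = ERROR_PATTERN.search(line)
        if match:
            counts[match.group("app")] += 1
    return counts

def build_incidents(counts, threshold=2):
    """Build one incident record per application at or above the threshold."""
    return [
        {
            "application": app,
            "error_count": n,
            "summary": f"{n} errors logged for {app}",
        }
        for app, n in sorted(counts.items())
        if n >= threshold
    ]

# Hypothetical log lines for illustration only.
sample_lines = [
    "2024-01-01 10:00:00 INFO [billing] request ok",
    "2024-01-01 10:00:01 ERROR [billing] timeout calling gateway",
    "2024-01-01 10:00:02 ERROR [billing] timeout calling gateway",
    "2024-01-01 10:00:03 FATAL [quotes] out of memory",
]

for incident in build_incidents(count_errors(sample_lines)):
    print(incident["summary"])
```

The threshold keeps a single stray error from opening an incident, which is one simple way to avoid generating noise from every alert.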

Loggly: Do IT groups have too many monitoring tools to manage?

Dragich: We are using at least six enterprise system management tools, but on the periphery we’ve got maybe a dozen or so point solutions. Many of these tools are for provisioning across different applications and infrastructure, and they just happen to do monitoring on the side. We have always embraced the idea of using a manager-of-managers system to get alerts from all these systems and let the subject matter experts decide what tools and data they need. There is pressure from above to eliminate some of these tools, which often happens around budget time. But as long as you have a way to correlate and integrate the data, having multiple toolsets is not necessarily a bad thing. We tie the tools and data into our ITSM incident management processes, which is important for getting overall value. Otherwise you’re just generating noise with all these alerts.

Loggly: Your mission at Auto Club of Michigan is to improve the internal customer experience of IT. What success have you had in the last year doing that?

Dragich: We just started that this year at the request of our CIO, and we are looking at helping the business users. These are the people selling our insurance and delivering the roadside assistance. We want to know how well IT services them. We’re trying to measure all of that by looking at service levels, uptime and basic functionality but the deeper question is, how is the application experience? Does the software work like it should? That feedback goes back to our application development people. We’re collecting the experience feedback through the service desk and we’re starting to use automatic surveys. Right now, we are still at the conversation level, but we hope to have the surveys out soon.

Loggly: IT performance generally relates to SLAs and server health. Are you setting a higher bar for those metrics given your focus on customer experience?

Dragich: Our bar for system performance and availability has always been high. The customer experience, which I equate to the end-user experience (EUE), is at the heart of it all and has become the focal point for how we measure IT services. This helps us make a stronger connection to the business and speak to them in a language they can appreciate.


Larry Dragich