
This article covers the basics of using the standard logging module that ships with all Python distributions. After reading this, you should be able to easily integrate logging into your Python application.

Python comes with a logging module in its standard library that provides a flexible framework for emitting log messages from Python programs. The module is widely used by libraries and is usually the first place developers turn when they need logging.

The module provides a way for applications to configure different log handlers and a way of routing log messages to these handlers. This can allow for a highly flexible configuration to manage many different use cases.

To emit a log message, a caller first requests a named logger. The application can use the name to configure different rules for different loggers. The caller then uses this logger to emit simply formatted messages at different log levels (DEBUG, INFO, ERROR, etc.), which lets the application handle higher-priority messages differently from lower-priority ones. While it might sound complicated, it can be as simple as this:

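```python
import logging

log = logging.getLogger("my-logger")

# Until the application configures logging, only WARNING and above are
# printed, so this INFO message is dropped by default.
log.info("Hello, world")
```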

Internally, the message is turned into a LogRecord object and routed to the Handler objects registered for this logger and, by default, for its ancestors up to the root logger. Each handler then uses a Formatter to turn the LogRecord into a string and emits that string.
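To make the LogRecord, Handler, and Formatter pipeline concrete, here is a minimal sketch that wires the three pieces together by hand. It writes to an in-memory io.StringIO buffer instead of stderr (an assumption made only so the output can be inspected), and it reuses the "my-logger" name from the snippet above.

```python
import io
import logging

# An in-memory stream stands in for stderr or a file so we can inspect output.
buffer = io.StringIO()

# The Handler decides where formatted records go...
handler = logging.StreamHandler(buffer)
# ...and its Formatter decides how a LogRecord is turned into a string.
handler.setFormatter(logging.Formatter("%(levelname)s %(name)s: %(message)s"))

log = logging.getLogger("my-logger")
log.setLevel(logging.INFO)   # let INFO records through this logger
log.addHandler(handler)
log.propagate = False        # keep the demo from also reaching root handlers

log.info("Hello, %s", "world")   # args are merged into the message at format time
print(buffer.getvalue(), end="")  # INFO my-logger: Hello, world
```

Passing "world" as an argument rather than pre-formatting the string means the interpolation only happens if a handler actually formats the record.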

In the following sections, we’ll review example implementations and best practices for using this module.

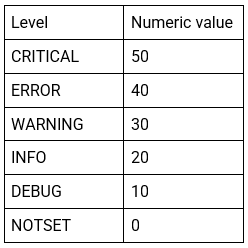

Not all log messages are created equal. The logging levels are listed in the Python documentation; we include them here for reference. When you set a logging level using the standard module, you're telling the library you want to handle all events from that level up. Setting the log level to INFO will include INFO, WARNING, ERROR, and CRITICAL messages; DEBUG messages will be filtered out. (NOTSET is not a message level: on a non-root logger it means the level is inherited from the parent logger.)
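Because levels are plain integers, the "from that level up" rule is a numeric comparison. The sketch below lists the standard levels and checks which of them pass a logger set to INFO; the logger name "level-demo" is arbitrary.

```python
import logging

# The standard levels are integers; higher means more severe.
names = ("NOTSET", "DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL")
levels = {name: getattr(logging, name) for name in names}
print(levels)
# {'NOTSET': 0, 'DEBUG': 10, 'INFO': 20, 'WARNING': 30, 'ERROR': 40, 'CRITICAL': 50}

log = logging.getLogger("level-demo")
log.setLevel(logging.INFO)

# A record passes the logger's check only if its level is >= INFO (20).
enabled = [name for name in names[1:] if log.isEnabledFor(levels[name])]
print(enabled)  # ['INFO', 'WARNING', 'ERROR', 'CRITICAL']
```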

A well-organized Python application is likely composed of many modules. Some of these modules may be intended for use by other programs, but unless you're intentionally developing reusable modules inside your application, you're most likely using a mix of modules from PyPI and modules you write specifically for your application.

As a general best practice, a module should emit log messages but should not configure how those messages are handled. That is the responsibility of the application.

The only responsibility modules have is to make it easy for the application to route their log messages. For this reason, the convention is for each module to simply use a logger named after the module itself. This makes it easy for the application to route different modules differently, while also keeping the logging code in the module simple. A module needs only two lines to set up logging and can then use the named logger:

```python
import logging

log = logging.getLogger(__name__)

def do_something():
    log.debug("Doing something!")
```

That is all there is to it. In Python, __name__ contains the full dotted name of the current module, so this works in any module.
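Because module loggers carry dotted names, they form a hierarchy that the application can configure at any level. This sketch assumes a hypothetical package `myapp` with modules `myapp.db` and `myapp.web`; in real code those names would come from `__name__`, and output goes to an in-memory buffer only so it can be inspected.

```python
import io
import logging

buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.setFormatter(logging.Formatter("%(name)s %(levelname)s %(message)s"))

# Application side: one handler on the (hypothetical) package-level logger.
app_logger = logging.getLogger("myapp")
app_logger.addHandler(handler)
app_logger.setLevel(logging.INFO)
app_logger.propagate = False

# Route one module differently: also show its DEBUG messages.
logging.getLogger("myapp.db").setLevel(logging.DEBUG)

# Module side: what getLogger(__name__) would return in myapp/db.py and myapp/web.py.
db_log = logging.getLogger("myapp.db")
web_log = logging.getLogger("myapp.web")

db_log.debug("query took 3 ms")   # emitted: myapp.db is set to DEBUG
web_log.debug("rendering page")   # dropped: myapp.web inherits INFO from myapp
web_log.info("request handled")   # emitted

print(buffer.getvalue(), end="")
```

Neither module configures anything; records from both propagate to the handler attached to `myapp`, and the application alone decides the per-module levels.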

Your main application should configure the logging subsystem so log messages go where they should. The Python logging module provides many ways to fine-tune this, but for almost all applications, the configuration can be straightforward.

A configuration generally consists of attaching a Handler, with a Formatter set on it, to the root logger. Because this is so common, the logging module provides a utility function called basicConfig that handles most use cases.
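As a rough illustration of what basicConfig saves you, this sketch performs comparable setups twice: once with basicConfig and once by hand. Both attach a StreamHandler with a BASIC_FORMAT Formatter to the root logger. The in-memory streams and the `force=True` argument (Python 3.8+) are assumptions of the demo so it can reconfigure the root logger; a normal application configures once and needs neither.

```python
import io
import logging

# 1. The shortcut: basicConfig creates a StreamHandler, gives it a Formatter
#    (BASIC_FORMAT by default) and attaches it to the root logger.
short = io.StringIO()
logging.basicConfig(stream=short, level=logging.INFO, force=True)
logging.getLogger("demo").info("configured with basicConfig")

# 2. Roughly the same configuration done by hand.
manual = io.StringIO()
root = logging.getLogger()
for existing in root.handlers[:]:   # remove what basicConfig installed
    root.removeHandler(existing)
    existing.close()
handler = logging.StreamHandler(manual)
handler.setFormatter(logging.Formatter(logging.BASIC_FORMAT))
root.addHandler(handler)
root.setLevel(logging.INFO)
logging.getLogger("demo").info("configured by hand")

print(short.getvalue(), end="")   # INFO:demo:configured with basicConfig
print(manual.getvalue(), end="")  # INFO:demo:configured by hand
```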

Applications should configure logging as early as possible, preferably as the first thing in the application, so logged messages do not get lost during startup.

Finally, applications should wrap the main application code in a try/except block so that any exceptions are sent through the logging interface instead of just to stderr. This acts as a global exception handler. It should not be the only place you log exceptions, though: as a rule of thumb, you should still anticipate and handle exceptions in try/except blocks at the relevant points in your code.

For specific Python Logging recipes, the Python Logging Cookbook is a great reference, but it can be a bit overwhelming for beginners. In the following sections, we’ll look at some example configurations to help you hit the ground running with the logging module.

These days, logging to standard output or standard error can be the simplest and best option for configuring logging. When using systemd to run a daemon, applications can just send log messages to stdout or stderr and have systemd forward the messages to journald and syslog. As an additional perk, this does not require catching exceptions, as Python already writes uncaught exceptions to standard error. That said, you should still follow proper conventions and handle your exceptions.

When running Python in containers such as Docker, logging to standard output is often the easiest option, because the container runtime can collect and manage that output directly.

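```python
import logging
import os
import sys

# Configure logging first; LOGLEVEL defaults to INFO when unset.
logging.basicConfig(level=os.environ.get("LOGLEVEL", "INFO"))

sys.exit(main())  # main() is your application's entry point
```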

That’s it. The application will now log all messages with level INFO or above to stderr, using a simple format:

ERROR:the.module.name:The log message

The application can even be configured to include DEBUG messages, or maybe only ERROR, by setting the LOGLEVEL environment variable.
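One caveat of passing LOGLEVEL straight to basicConfig: level names are case-sensitive, so `LOGLEVEL=debug` raises a ValueError at startup. The hypothetical helper `level_from_env` below is a minimal sketch that normalizes the value and falls back to INFO for unknown names. It also sends output to stdout rather than basicConfig's default of stderr, which is an assumption suited to container setups that collect stdout.

```python
import logging
import os
import sys

def level_from_env(environ=os.environ, default="INFO"):
    """Hypothetical helper: read LOGLEVEL case-insensitively as an int level."""
    name = environ.get("LOGLEVEL", default).strip().upper()
    # For a known level name, getLevelName returns its int value; for an
    # unknown name it returns the string "Level <name>" instead.
    level = logging.getLevelName(name)
    return level if isinstance(level, int) else logging.getLevelName(default)

logging.basicConfig(stream=sys.stdout, level=level_from_env())
```

For example, `level_from_env({"LOGLEVEL": "debug"})` returns `logging.DEBUG`, and an unrecognized value such as `"verbose"` falls back to `logging.INFO` instead of crashing.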

However, one potential problem with this solution is that exceptions are logged as multiple lines, which can cause problems for later analysis. Configuring Python to emit multi-line exceptions as a single line takes a bit more work, but it is certainly possible. Note that in the implementation below, calling logging.exception is shorthand for logging.error(..., exc_info=True).

```python
import logging
import os
import sys

class OneLineExceptionFormatter(logging.Formatter):
    def formatException(self, exc_info):
        result = super().formatException(exc_info)
        # repr() escapes the traceback's newlines as literal "\n" sequences.
        return repr(result)

    def format(self, record):
        result = super().format(record)
        if record.exc_text:
            # Strip the remaining newlines, e.g. the one joining message and traceback.
            result = result.replace("\n", "")
        return result

handler = logging.StreamHandler()
formatter = OneLineExceptionFormatter(logging.BASIC_FORMAT)
handler.setFormatter(formatter)
root = logging.getLogger()
root.setLevel(os.environ.get("LOGLEVEL", "INFO"))
root.addHandler(handler)

try:
    sys.exit(main())  # main() is your application's entry point
except Exception:
    logging.exception("Exception in main()")
    sys.exit(1)
```
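To check what the formatter above actually produces, this sketch logs a caught exception through it into an in-memory buffer. The formatter class is repeated so the snippet runs on its own, and the ZeroDivisionError is just a stand-in for a real failure.

```python
import io
import logging

class OneLineExceptionFormatter(logging.Formatter):
    def formatException(self, exc_info):
        # repr() escapes the traceback's newlines.
        return repr(super().formatException(exc_info))

    def format(self, record):
        result = super().format(record)
        if record.exc_text:
            result = result.replace("\n", "")
        return result

buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.setFormatter(OneLineExceptionFormatter(logging.BASIC_FORMAT))
log = logging.getLogger("one-line-demo")
log.addHandler(handler)
log.propagate = False

try:
    1 / 0
except ZeroDivisionError:
    log.exception("Division failed")

output = buffer.getvalue()
# Only the terminator appended by StreamHandler remains as a real newline.
print(output.count("\n"))  # 1
print(output, end="")
```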

An alternative is to send log messages directly to syslog. This is a good fit for older operating systems that don't have systemd. In an ideal world this would be simple, but Python needs a slightly more elaborate configuration to send Unicode log messages: the formatter below prefixes each message with a byte order mark (BOM). Here is a sample implementation.

```python
import logging
import logging.handlers
import os
import sys

class SyslogBOMFormatter(logging.Formatter):
    def format(self, record):
        result = super().format(record)
        # Prefix U+FEFF, the byte order mark, to flag the message as Unicode.
        return "\ufeff" + result

# '/dev/log' is the local syslog socket on most Linux systems.
handler = logging.handlers.SysLogHandler(address="/dev/log")
formatter = SyslogBOMFormatter(logging.BASIC_FORMAT)
handler.setFormatter(formatter)
root = logging.getLogger()
root.setLevel(os.environ.get("LOGLEVEL", "INFO"))
root.addHandler(handler)

try:
    sys.exit(main())  # main() is your application's entry point
except Exception:
    logging.exception("Exception in main()")
    sys.exit(1)
```
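The BOM is the single character U+FEFF, which becomes the three bytes EF BB BF once the message is encoded as UTF-8 (the encoding SysLogHandler applies before sending). This sketch formats a record with the formatter above, without a running syslog daemon, to show what the prefix looks like on the wire; the logger name and message are made up for the demo.

```python
import logging

class SyslogBOMFormatter(logging.Formatter):
    def format(self, record):
        # Prefix U+FEFF (the byte order mark) to the formatted message.
        return "\ufeff" + super().format(record)

formatter = SyslogBOMFormatter(logging.BASIC_FORMAT)
record = logging.LogRecord(
    name="syslog-demo", level=logging.WARNING, pathname="demo.py", lineno=0,
    msg="Grüße aus Köln", args=None, exc_info=None)

text = formatter.format(record)
payload = text.encode("utf-8")  # UTF-8 bytes, as sent over the syslog socket
print(payload[:3])              # b'\xef\xbb\xbf'
```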

Another option is to log messages directly to a file. This is rarely useful these days: administrators can configure syslog to write certain messages to specific files, and when deploying inside containers, writing log files is an anti-pattern. Also, if you use centralized logging, dealing with additional log files is an added concern. But it is an option that's still available.

When logging to files, one of the main things to be wary of is that log files need to be rotated regularly. The application needs to detect that the log file has been renamed and handle that situation. While Python provides its own file rotation handlers (RotatingFileHandler and TimedRotatingFileHandler), it is often best practice to leave log rotation to dedicated tools such as logrotate. The WatchedFileHandler keeps track of the log file and reopens it if it is rotated, so it works well with logrotate without requiring any special signals.

Here’s a sample implementation.

```python
import logging
import logging.handlers
import os
import sys

# LOGFILE overrides the default path; the handler reopens the file
# if an external tool such as logrotate moves it aside.
handler = logging.handlers.WatchedFileHandler(
    os.environ.get("LOGFILE", "/var/log/yourapp.log"))
formatter = logging.Formatter(logging.BASIC_FORMAT)
handler.setFormatter(formatter)
root = logging.getLogger()
root.setLevel(os.environ.get("LOGLEVEL", "INFO"))
root.addHandler(handler)

try:
    sys.exit(main())  # main() is your application's entry point
except Exception:
    logging.exception("Exception in main()")
    sys.exit(1)
```
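To see the WatchedFileHandler's reopen behavior in isolation, this sketch simulates a rotation by renaming the log file in a temporary directory, roughly what logrotate does by default when it moves a file aside. It assumes a POSIX system, since Windows does not allow renaming a file that is still open.

```python
import logging
import logging.handlers
import os
import tempfile

with tempfile.TemporaryDirectory() as tmpdir:
    path = os.path.join(tmpdir, "app.log")
    handler = logging.handlers.WatchedFileHandler(path)
    handler.setFormatter(logging.Formatter("%(message)s"))
    log = logging.getLogger("rotation-demo")
    log.addHandler(handler)
    log.propagate = False
    log.setLevel(logging.INFO)

    log.info("before rotation")
    os.rename(path, path + ".1")  # simulate the rotation tool moving the file
    log.info("after rotation")    # handler sees the file is gone and reopens path
    handler.close()

    with open(path + ".1") as f:
        rotated_text = f.read()
    with open(path) as f:
        current_text = f.read()

print(repr(rotated_text))  # 'before rotation\n'
print(repr(current_text))  # 'after rotation\n'
```

The handler checks the file's device and inode before each write, which is why no signal from the rotation tool is needed.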

You can also use the standard Python logging module to emit log messages to an HTTP endpoint. The HTTPHandler can be useful when you are running on a PaaS and don't have direct host access to set up syslog, or when you are behind a firewall that blocks outbound syslog. It can also be used to log directly to centralized logging systems like SolarWinds® Loggly®.

Here’s a simple Python script that uses the HTTPHandler to emit a warning message to a Loggly HTTP endpoint.

```python
# Import the logging package and its handlers module
import logging
import logging.handlers

# Create the demo handler; replace <TOKEN> with your Loggly token
demoHandler = logging.handlers.HTTPHandler('logs-00.loggly.com', '/inputs/<TOKEN>/tag/http/', method='POST')

logger = logging.getLogger()

# Add the handler we created (addHandler returns None, so nothing to assign)
logger.addHandler(demoHandler)

# Emit a log message
logger.warning("Warning Message!")
```

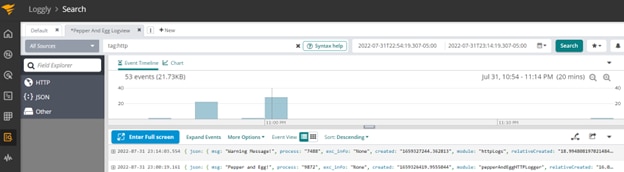

Log messages in Loggly created by the HTTPHandler in Python.
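Note that HTTPHandler does not send the formatted log line: it URL-encodes the LogRecord's attribute dictionary (via its mapLogRecord method) as a form body. This sketch makes that visible by pointing the handler at a throwaway HTTP server on localhost instead of Loggly; the server, its random port, and the `/inputs/demo` path are assumptions for the demo only.

```python
import http.server
import logging
import logging.handlers
import threading
import urllib.parse

class CaptureHandler(http.server.BaseHTTPRequestHandler):
    """Stores the body of one POST request on the server object."""

    def do_POST(self):
        length = int(self.headers["Content-Length"])
        self.server.body = self.rfile.read(length).decode("utf-8")
        self.send_response(200)
        self.end_headers()

    def log_message(self, *args):  # silence the default request logging
        pass

server = http.server.HTTPServer(("127.0.0.1", 0), CaptureHandler)
thread = threading.Thread(target=server.handle_request)  # serve one request
thread.start()

host = "127.0.0.1:%d" % server.server_port
demo_handler = logging.handlers.HTTPHandler(host, "/inputs/demo", method="POST")
logger = logging.getLogger("http-demo")
logger.propagate = False
logger.addHandler(demo_handler)

logger.warning("Warning Message!")  # blocks until the server responds
thread.join()
server.server_close()

fields = urllib.parse.parse_qs(server.body)
print(fields["msg"], fields["levelname"])  # ['Warning Message!'] ['WARNING']
```

The `logger.warning` call does not return until the HTTP round trip completes, which illustrates the blocking risk discussed next.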

It is possible to use other log destinations, and certain frameworks make use of this (e.g., Django can send certain log messages as email). Many of the more elaborate log handlers in the logging library can easily block the application, causing outages simply because the logging infrastructure was unresponsive. For these reasons, it is often best to keep the logging configuration of an application as simple as possible.
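If you do need a handler that might be slow, one standard-library mitigation described in the Python Logging Cookbook is to put a QueueHandler in front of it: the application thread only enqueues records, and a QueueListener thread performs the potentially blocking I/O. In this sketch an in-memory StreamHandler stands in for the slow destination (an assumption; in practice it might be an HTTP or SMTP handler).

```python
import io
import logging
import logging.handlers
import queue

buffer = io.StringIO()
slow_handler = logging.StreamHandler(buffer)  # stand-in for a slow handler
slow_handler.setFormatter(logging.Formatter("%(levelname)s:%(message)s"))

log_queue = queue.Queue(-1)  # unbounded, so enqueueing never blocks
listener = logging.handlers.QueueListener(log_queue, slow_handler)
listener.start()             # background thread that drains the queue

log = logging.getLogger("queue-demo")
log.propagate = False
log.addHandler(logging.handlers.QueueHandler(log_queue))

log.warning("handled off the calling thread")  # returns after enqueueing
listener.stop()              # processes remaining records, then joins the thread

print(buffer.getvalue(), end="")  # WARNING:handled off the calling thread
```

This keeps the calling thread responsive, at the cost of one more moving part, so it fits the advice above only when a slow handler is truly needed.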

A growing trend in logging is to separate it as much as possible from the core functionality of your application. That way, you can have different behavior in different environments or deployment contexts. Using the HTTPHandler with a system like Loggly is one simple way to achieve this directly from your application.

When deploying to containers, try to keep things as simple as possible. Log to standard out/err and rely on your container host or orchestration platform to decide what to do with the logs. You can still use log centralization services, but through a sidecar or log shipper approach.

If fine-grained configuration is desirable, the logging module can also load its configuration from a configuration file. This can be quite powerful but is rarely necessary. When loading logging configuration from a file, specify disable_existing_loggers=False. The default (True), which exists only for backward compatibility, disables any loggers that already exist when the configuration is loaded, which typically includes the loggers modules create at import time. This can break many modules, so rely on the default only with caution.
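The same flag exists as a keyword argument of logging.config.fileConfig for INI-style files. The sketch below uses logging.config.dictConfig with an in-code dict instead; in practice that dict is often loaded from a JSON or YAML file (an assumption about your setup). The module logger name "myapp.db" is hypothetical.

```python
import logging
import logging.config

# A module-level logger created at import time, before configuration runs.
db_log = logging.getLogger("myapp.db")

LOGGING = {
    "version": 1,
    # With the default (True), "myapp.db" would be disabled, because it
    # already exists and is not named in this configuration.
    "disable_existing_loggers": False,
    "formatters": {"basic": {"format": "%(levelname)s:%(name)s:%(message)s"}},
    "handlers": {
        "console": {
            "class": "logging.StreamHandler",
            "formatter": "basic",
            "stream": "ext://sys.stderr",
        },
    },
    "root": {"level": "INFO", "handlers": ["console"]},
}

logging.config.dictConfig(LOGGING)
print(db_log.disabled)  # False: the module's logger still works
db_log.info("still logging after configuration")
```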

Logging in Python is often simple and well-standardized, thanks to a powerful logging framework right in the standard library.

In most cases, modules should simply log everything to a logger named after the module. This makes it easy for the application to route log messages from different modules to different places, if necessary.

Applications then have several options for configuring logging. In a modern infrastructure, though, following best practices simplifies this a great deal. Unless you have specific needs, simply logging to stdout/stderr and letting the system or your container handle log messages is often sufficient and the best approach.

Last updated: 2022