Before the cloud computing era, applications often logged to files on a server. Administrators would need to log in to dozens, hundreds, or even thousands of systems to search through log files. Modern applications can have thousands of different components running on dozens of different services, making file-based logs obsolete. Instead, developers can use centralization to consolidate logs into a single location.

Using centralization tools and services, you can:

This section explains how to centralize logs from standalone Python applications, as well as Python applications running in Docker. We will demonstrate these methods using MDN Local Library, a Django-based application provided by MDN. Django uses the standard Python logging module, which is also used by many other Python frameworks.

Two common methods for centralizing Python logs are syslog and dedicated log management solutions.

Syslog is a widely used standard for formatting, storing, and sending logs on Unix-based systems. Syslog runs as a service that collects logs from applications and system processes, then writes them to a file or another syslog server. This can make it incredibly useful for centralization.

However, syslog does have limitations. While widely supported, the syslog format is largely unstructured, with little support for non-standard fields or multiline logs. Since logs are stored as plaintext, searching can be slow and difficult. Also, because logs are stored on a single host, there’s a risk of losing log data if the host fails or the file becomes corrupt.

The SysLogHandler logs directly from Python to a syslog server. In this example, we’ll send logs of all levels to a local syslog server over UDP:

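    LOGGING = {
        'version': 1,
        'handlers': {
            'syslog': {
                'level': 'DEBUG',
                'class': 'logging.handlers.SysLogHandler',
                'facility': 'local7',
                # SysLogHandler sends over UDP by default; 514 is the standard syslog port
                'address': ('localhost', 514)
            }
        },
        'loggers': {
            # Route everything logged under the "django" logger to syslog
            'django': {
                'handlers': ['syslog'],
                'level': 'DEBUG'
            },
        }
    }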
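The same handler also works outside Django. As a minimal sketch of a standalone script, assuming a syslog daemon is listening for UDP on localhost port 514 (the function name build_syslog_logger and the logger name myapp are hypothetical and used only for illustration), you could attach a SysLogHandler directly:

```python
import logging
import logging.handlers


def build_syslog_logger(name, address=("localhost", 514)):
    """Return a logger that forwards all records to a syslog server over UDP."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)

    # SysLogHandler uses UDP by default; "local7" matches the Django example.
    handler = logging.handlers.SysLogHandler(address=address, facility="local7")
    # Prefix each message with the logger name so it is easy to grep for.
    handler.setFormatter(logging.Formatter("%(name)s: %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = build_syslog_logger("myapp")  # hypothetical application name
    log.info("application started")
```

Because UDP is connectionless, this script does not fail if no syslog server is listening; the messages are simply dropped.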

After starting the application, logs will start to appear in /var/log/syslog:

    $ sudo grep 'django' /var/log/syslog
    Sep 26 11:24:36 localhost (0.000) SELECT "django_migrations"."app", "django_migrations"."name" FROM "django_migrations"; args=()
    Sep 26 11:24:43 localhost (0.000) SELECT "django_session"."session_key", "django_session"."session_data"...
    Sep 26 11:24:43 Exception while resolving variable 'is_paginated' in template 'index.html'.#012Traceback (most recent call last):#012...
    Sep 26 11:24:43 localhost (0.000) UPDATE "django_session" SET "session_data" =...
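The #012 sequences in the traceback line illustrate the multiline limitation mentioned earlier: the newline characters were flattened into escape codes. As a rough sketch, assuming these sequences were produced by the syslog daemon escaping control characters as three-digit octal codes (as rsyslog typically does), a small helper could restore them when reading the file. The function name is hypothetical, and genuine text that happens to look like an escape, such as "#012" in a ticket number, would also be converted:

```python
import re

# Matches "#" followed by exactly three octal digits, e.g. "#012" for a newline.
_OCTAL_ESCAPE = re.compile(r"#([0-7]{3})")


def restore_control_characters(line):
    """Turn escapes such as "#012" back into the control characters they encode."""

    def replace(match):
        code = int(match.group(1), 8)
        # Only decode ASCII control characters; leave text like "#777" untouched.
        return chr(code) if code < 32 else match.group(0)

    return _OCTAL_ESCAPE.sub(replace, line)


if __name__ == "__main__":
    sample = "Exception while resolving variable 'is_paginated'#012Traceback (most recent call last):"
    print(restore_control_characters(sample))
```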

Log management solutions, such as SolarWinds® Loggly®, are built to ingest, parse, index, and store large volumes of log data. Compared to syslog, they can provide better scalability, better protection against data loss, improved search performance, and more ways of interacting with log data. For example, Loggly is designed to provide a web-based user interface, a query language, real-time monitoring, and third-party integrations, to name a few.

There are also logging solutions built to support logging directly from code using a custom library or one of the standard library’s built-in handlers. In this example, we use the Loggly Python handler in combination with python-json-logger to send JSON-formatted logs to Loggly over HTTPS. Using JSON can allow Loggly to automatically parse out each field while keeping the logs both readable and compact. Before using this code snippet, make sure to replace TOKEN in the URL field with your actual Loggly token. You can also set a custom tag by replacing “python” in the URL.

    LOGGING = {
        'version': 1,
        'formatters': {
            # Render each record as JSON using python-json-logger
            'json': {
                'class': 'pythonjsonlogger.jsonlogger.JsonFormatter'
            }
        },
        'handlers': {
            'loggly': {
                'class': 'loggly.handlers.HTTPSHandler',
                'level': 'DEBUG',
                'formatter': 'json',
                # Replace TOKEN with your Loggly token; "python" is the tag
                'url': 'https://logs-01.loggly.com/inputs/TOKEN/tag/python',
            }
        },
        'loggers': {
            'django': {
                'handlers': ['loggly'],
                'level': 'DEBUG'
            }
        }
    }

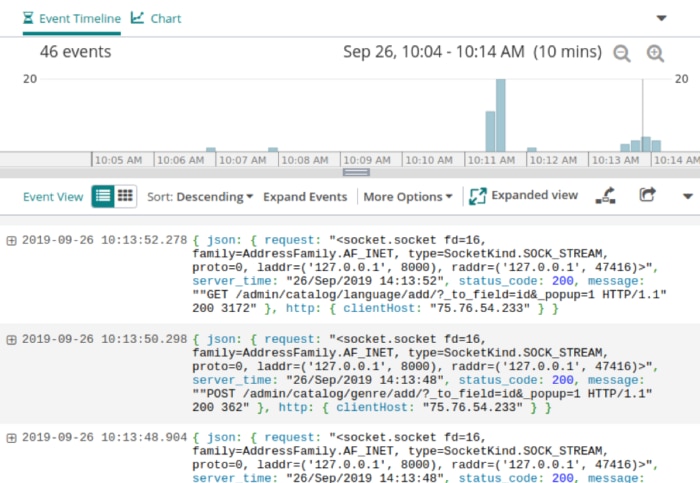

When you run the application, the parsed logs are built to appear in Loggly:

Viewing Django logs in SolarWinds Loggly. © 2019 SolarWinds, Inc. All rights reserved.
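To make the idea of JSON-formatted logs more concrete, here is a minimal sketch that uses only the standard library. It is a hypothetical stand-in written for illustration, not the implementation of python-json-logger, and the class name and chosen field names are assumptions:

```python
import json
import logging


class MinimalJsonFormatter(logging.Formatter):
    """Hypothetical stand-in that renders each record as one JSON object."""

    def format(self, record):
        payload = {
            "asctime": self.formatTime(record),
            "levelname": record.levelname,
            "name": record.name,
            "message": record.getMessage(),
        }
        # Keep tracebacks inside the same event instead of spilling onto extra lines.
        if record.exc_info:
            payload["exc_info"] = self.formatException(record.exc_info)
        return json.dumps(payload)


if __name__ == "__main__":
    handler = logging.StreamHandler()
    handler.setFormatter(MinimalJsonFormatter())
    logger = logging.getLogger("json_demo")  # hypothetical logger name
    logger.addHandler(handler)
    logger.setLevel(logging.DEBUG)
    logger.warning("Disk usage at %d%%", 91)
```

Because every event is a single JSON object with named fields, a log management service can index each field without custom parsing rules, and multiline tracebacks stay attached to the event that produced them.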

The challenge with logging from Docker containers is that they often run as isolated, ephemeral processes. Many common logging methods do not work as effectively in this architecture. However, there are still ways to centralize Docker logs.

The Logspout container collects logs from other containers running on a host and forwards them to a syslog server or other destination. This is an easy way to collect logs since it is designed to automatically work for all containers and requires very little configuration. The only requirement is that containers write their logs to the standard streams (STDOUT and STDERR).

For example, the following command routes all logs to a local syslog server:

    $ docker run --name="logspout" --volume=/var/run/docker.sock:/var/run/docker.sock gliderlabs/logspout syslog://syslog.server:514
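For Logspout to pick up a Python application's logs, the application itself has to write them to the standard streams. A minimal sketch of that setup using the standard logging module follows; the function name and the format string are assumptions chosen for illustration:

```python
import logging
import sys


def configure_container_logging(level=logging.INFO):
    """Send all log records from the root logger to STDOUT."""
    # StreamHandler defaults to STDERR, so STDOUT is passed explicitly here.
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s")
    )
    root = logging.getLogger()
    root.setLevel(level)
    root.addHandler(handler)
    return handler


if __name__ == "__main__":
    configure_container_logging()
    logging.getLogger("app").info("container started")  # hypothetical logger name
```

Either stream works, since both STDOUT and STDERR are collected from the container.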

There is also a version that can route logs to Loggly. Before running this command, make the following replacements:

<token>: Your Loggly customer token.

<tags>: A comma-separated list of tags to apply to each log event.

<filter>: Which containers to log. Remove the FILTER_NAME parameter to log all containers.

    $ docker run -e 'LOGGLY_TOKEN=<token>' -e 'LOGGLY_TAGS=<tags>' -e 'FILTER_NAME=<filter>' --volume /var/run/docker.sock:/tmp/docker.sock iamatypeofwalrus/logspout-loggly

The Docker logging driver is a service that automatically collects container logs written to STDOUT and STDERR. The driver logs to a file by default, but you can change this to a syslog server or other destination. Logging to a file can also allow you to use the docker logs command to view a container’s logs quickly.

Configuring the logging driver can be problematic with multiple servers since each server must be individually configured. Because of this, we don’t recommend it for large or production deployments.

Lastly, you can use a logging library to send logs directly from your application. However, this method is often discouraged since it means connecting each individual container to your centralization service, which consumes resources and can make it harder to deploy changes. You also lose valuable metadata only available using other methods, such as the container name and hostname.
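If you do log directly from application code, some of that metadata can be added back by hand. As a minimal sketch, assuming the hostname reported inside the container is a useful identifier (Docker sets it to the container ID unless you override it), a logging filter can attach it to every record. The class name HostnameFilter and the hostname field are hypothetical:

```python
import logging
import socket


class HostnameFilter(logging.Filter):
    """Attach the local hostname to every record passing through the handler."""

    def __init__(self):
        super().__init__()
        # Resolve once; inside a container this is the container's hostname.
        self.hostname = socket.gethostname()

    def filter(self, record):
        record.hostname = self.hostname
        return True  # never drop records, only annotate them


if __name__ == "__main__":
    handler = logging.StreamHandler()
    handler.addFilter(HostnameFilter())
    handler.setFormatter(logging.Formatter("%(hostname)s %(levelname)s %(message)s"))
    logger = logging.getLogger("metadata_demo")  # hypothetical logger name
    logger.addHandler(handler)
    logger.warning("cache miss rate is high")
```

This recovers only what the process can see for itself. Details known only to the Docker host, such as the container name, still require one of the other methods.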

When developing your centralization strategy, consider these recommendations:

Log to STDOUT and STDERR, and use Logspout to centralize them. Logging containers to standard output can allow both the Docker logging driver and Logspout to read and manage your container logs.

Last updated: 2022