Aggregating Logs From Microservices—Best Practices

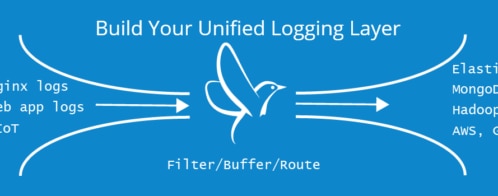

Depending on where you are on your journey with microservices, you may have noticed that visibility into the system can be tricky at times. Well, there’s good news. Not knowing what’s going on in the system is a solvable problem. One of the first things you can do is get your logs in order, and one of the best ways to do that is to aggregate your logs into a single logging service. In this blog post, we’ll go through what to look for in a log aggregation service and how to make the best use of those logs.

What Is a Log Aggregation Service?

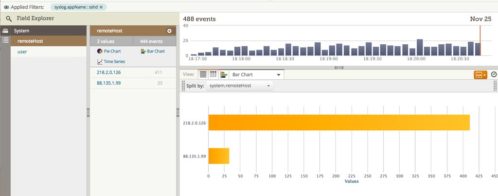

A log aggregation service is a product that collects the logs from your different services and puts them in one place. This is useful because logs are usually among the first things an operations team looks at when something goes wrong. It’s also useful because, depending on your industry, you may have compliance or regulatory standards to adhere to, and keeping your logs in one place can help you meet them.
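
To make the idea concrete, here is a toy, in-memory Python sketch of the core job an aggregator does: merging log events from several services into a single, time-ordered view. The event shape and service names are hypothetical, and a real aggregation service also handles shipping, indexing, storage, and search, none of which is shown here.

```python
import heapq
from typing import Iterable, Iterator

# Hypothetical event shape: (timestamp, service, message).
# Each per-service stream is assumed to be sorted by timestamp already.
Event = tuple[str, str, str]


def aggregate(*streams: Iterable[Event]) -> Iterator[Event]:
    """Merge already-sorted per-service streams into one time-ordered stream."""
    # ISO 8601 UTC timestamps in a single format sort correctly as strings.
    return heapq.merge(*streams, key=lambda event: event[0])


orders = [
    ("2024-01-01T12:00:00Z", "orders", "order received"),
    ("2024-01-01T12:00:03Z", "orders", "order confirmed"),
]
payments = [
    ("2024-01-01T12:00:01Z", "payments", "charge started"),
    ("2024-01-01T12:00:02Z", "payments", "charge succeeded"),
]

for ts, service, message in aggregate(orders, payments):
    print(ts, service, message)
```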

What Makes a Log Ready for Aggregation?

To get the most from your logs, you’ll need to make sure they’re useful and that any tool or service can understand them. Doing a little work up front on each of your services, and perhaps creating a library or set of tools shared across your services, is a great first step toward making your logs helpful and insightful.

There are three things you’ll need to do. First, create a common format. Second, make sure logs can be grouped by action. Third, to help prevent data leaks, make sure certain fields are scrubbed or left out entirely.
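
As a minimal sketch of the scrubbing step, the Python function below redacts values whose keys appear on a deny-list before an event leaves the service. The key names in `SENSITIVE_KEYS` are hypothetical; which fields count as sensitive depends on your data and the regulations you work under. Scrubbing at the source, rather than in the aggregator, means the sensitive values never travel over the network.

```python
from typing import Any

# Hypothetical deny-list; adapt it to your own data and compliance needs.
SENSITIVE_KEYS = {"password", "credit_card", "ssn", "authorization"}
REDACTED = "[REDACTED]"


def scrub(event: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of a log event with sensitive values redacted.

    Key matching is case-insensitive, and nested dicts are scrubbed recursively.
    """
    clean: dict[str, Any] = {}
    for key, value in event.items():
        if key.lower() in SENSITIVE_KEYS:
            clean[key] = REDACTED
        elif isinstance(value, dict):
            clean[key] = scrub(value)
        else:
            clean[key] = value
    return clean


event = {
    "message": "login",
    "user": "ana",
    "password": "hunter2",
    "headers": {"Authorization": "Bearer abc", "Accept": "*/*"},
}
print(scrub(event))
```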

Establish a Common Format Between Logs

One of the first things to do to make a log aggregator a success is to settle on a log format. Every service should use the same format and a set of commonly established fields, so that when you combine logs or view them across services, you can query well-known fields instead of guessing which format a given service outputs. For example, you don’t want to mix plain text, XML, and JSON across your services; figuring out what’s going on would be a nightmare.

One of the most common log formats today is a JSON object per log event. The most common logging libraries (log4j, log4net, bunyan, etc.) have a way to output event data as JSON. Because log information is data, JSON naturally lends itself to carrying all the extra metadata you need alongside the event message. JSON also has a plethora of tools you can use for data analysis later if you want.
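
In Python, the standard library’s `logging` module has no built-in JSON formatter, but a custom `logging.Formatter` subclass can emit one JSON object per line. The sketch below is a hand-rolled illustration; the field names (`timestamp`, `level`, `logger`, `message`) are assumptions rather than a standard schema.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            # record.created is a POSIX timestamp; emit it as ISO 8601 UTC.
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("orders")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info("order %s received", 42)
```

Keeping each event on a single line makes the output easy for a log shipper to split and for the aggregator to parse.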

A common format lets you guarantee a set of fields shared by all your services. A good place to start is a field identifying which service produced the log. If you’re in a Kubernetes environment, pod- and container-level information can be extremely helpful too. The same goes for a timestamp recording when the event occurred, and for some metadata about the request, so you can tie together logs from different services.
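
Here is one hypothetical way to attach those common fields to every event. The field names and the environment variables (`SERVICE_NAME`, `POD_NAME`, `POD_NAMESPACE`, `CONTAINER_NAME`) are assumptions; in Kubernetes you would typically set them in the pod spec, for example using the Downward API for the pod name and namespace.

```python
import json
import os
from datetime import datetime, timezone
from typing import Any, Optional


def common_fields(request_id: Optional[str] = None) -> dict[str, Any]:
    """Fields every service attaches to every log event (hypothetical schema)."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        # Assumed to be injected by the deployment as environment variables.
        "service": os.environ.get("SERVICE_NAME", "unknown"),
        "pod": os.environ.get("POD_NAME", "unknown"),
        "namespace": os.environ.get("POD_NAMESPACE", "unknown"),
        "container": os.environ.get("CONTAINER_NAME", "unknown"),
        "request_id": request_id,
    }


def log_event(message: str, request_id: Optional[str] = None, **extra: Any) -> str:
    """Serialize one event: common fields first, then event-specific fields."""
    event = {**common_fields(request_id), "message": message, **extra}
    line = json.dumps(event)
    print(line)
    return line


log_event("payment authorized", request_id="req-123", amount_cents=4999)
```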

Ensure You Can Trace a Request Through Your System

Once you have a common set of fields, you need a way to tie together the log events for a single request as it moves through the system. A couple of different open-source tracing projects can help with this. You want to be able to trace a request or task through each and every service, so that if there are issues with a certain type of request, you can use the logging system to determine what needs to be fixed.
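
Tracing libraries normally handle propagation for you, but the underlying idea can be sketched by hand: reuse the caller’s request ID if one arrives, otherwise generate one, stamp it on every log record, and forward it on outgoing calls. The `X-Request-ID` header name is an assumption (a common convention, not a standard), and the header lookup here is case-sensitive for simplicity, whereas real HTTP frameworks treat header names case-insensitively.

```python
import contextvars
import logging
import uuid
from typing import Mapping

# Hypothetical header name; tracers based on W3C Trace Context use "traceparent".
REQUEST_ID_HEADER = "X-Request-ID"
current_request_id = contextvars.ContextVar("request_id", default="-")


def begin_request(headers: Mapping[str, str]) -> str:
    """Reuse the caller's request ID if present, otherwise start a new one."""
    request_id = headers.get(REQUEST_ID_HEADER) or uuid.uuid4().hex
    current_request_id.set(request_id)
    return request_id


def outgoing_headers() -> dict[str, str]:
    """Headers to attach when this service calls another service."""
    return {REQUEST_ID_HEADER: current_request_id.get()}


class RequestIdFilter(logging.Filter):
    """Stamp every log record with the current request ID."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.request_id = current_request_id.get()
        return True


handler = logging.StreamHandler()
handler.addFilter(RequestIdFilter())
handler.setFormatter(logging.Formatter("%(request_id)s %(name)s %(message)s"))
logger = logging.getLogger("checkout")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

begin_request({"X-Request-ID": "req-123"})
logger.info("calling payments")
print(outgoing_headers())
```

A `ContextVar` is used rather than a global so that concurrent requests handled by async tasks each see their own ID.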

You’ll want to group or filter event data by request, so it’s easy to see the full path a request took. Each service should log, or otherwise record, all the work it did for a request, and you need to be able to follow that request across all your microservices. Thankfully, there’s usually a decent tracing library available for whatever platform you’re developing on.
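
Once every event carries a request ID, reconstructing a request’s path is a group-and-sort. This sketch assumes each log line is a JSON object with hypothetical `timestamp`, `service`, `request_id`, and `message` fields, with timestamps in one ISO 8601 UTC format so they sort correctly as strings. In practice you would run the equivalent query in your aggregator rather than in application code.

```python
import json
from collections import defaultdict


def group_by_request(lines: list[str]) -> dict[str, list[dict]]:
    """Group JSON log lines by request_id, each group ordered by timestamp."""
    groups: dict[str, list[dict]] = defaultdict(list)
    for line in lines:
        event = json.loads(line)
        groups[event.get("request_id", "unknown")].append(event)
    for events in groups.values():
        events.sort(key=lambda e: e["timestamp"])
    return dict(groups)


logs = [
    '{"timestamp": "2024-01-01T12:00:01Z", "service": "payments", "request_id": "req-1", "message": "charge ok"}',
    '{"timestamp": "2024-01-01T12:00:00Z", "service": "gateway", "request_id": "req-1", "message": "POST /orders"}',
    '{"timestamp": "2024-01-01T12:00:00Z", "service": "gateway", "request_id": "req-2", "message": "GET /health"}',
    '{"timestamp": "2024-01-01T12:00:02Z", "service": "orders", "request_id": "req-1", "message": "order saved"}',
]

for event in group_by_request(logs)["req-1"]:
    print(event["service"], "->", event["message"])
```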

Establish Who Can Access the Logs

Another benefit of aggregating your logs is the ability to centrally manage access to them. In certain sectors of the economy, you need to provide auditors with a comprehensive list of who can view and access log data. Manually creating this type of aggregated access list across many microservices is impractical.

You can also lock down individual log sources. Instead of giving users broad access to your cloud logging systems, you can refine access per log source so teams have the right view into the system while you maintain security and compliance.
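
Aggregation products enforce access with their own role-based controls, so the Python below is only a hypothetical model of the idea: a single policy mapping each log source to its readers, a per-source access check, and an audit report that falls out of the same data. The `AccessPolicy` class and the source and user names are all illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class AccessPolicy:
    """Hypothetical per-source policy: log source -> users allowed to read it."""

    readers: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, source: str, user: str) -> None:
        self.readers.setdefault(source, set()).add(user)

    def can_read(self, user: str, source: str) -> bool:
        return user in self.readers.get(source, set())

    def audit_report(self) -> list[tuple[str, str]]:
        """Every (source, user) pair with read access, sorted for auditors."""
        return sorted((s, u) for s, users in self.readers.items() for u in users)


policy = AccessPolicy()
policy.grant("payments", "alice")
policy.grant("payments", "auditor-bob")
policy.grant("orders", "carol")

print(policy.can_read("carol", "payments"))
for source, user in policy.audit_report():
    print(source, user)
```

Because access checks and the audit list read from the same central policy, the list auditors see cannot drift from what is actually enforced.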

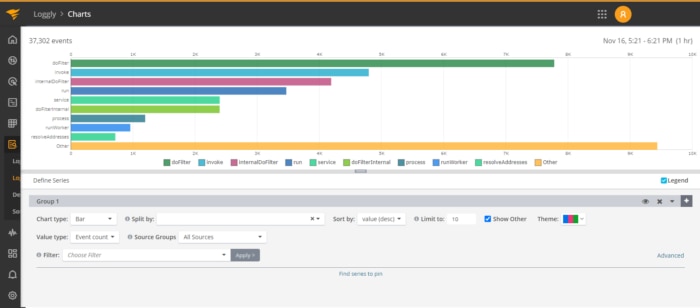

Finally, Alerting

Now that your logs are scrubbed and stored in one place, you can move on to the important task of making sure your system is performing as designed. A common technique is to monitor the logs for early signs of issues and send alerts when certain criteria are met. A comprehensive monitoring service can process all your log data and alert you to a problem before your customers are impacted.
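
A simple rule of this kind is “alert when too many errors arrive within a time window.” The sketch assumes events arrive in timestamp order and carry a `level` field; `ErrorRateAlert` and its threshold and window values are hypothetical. It keeps firing on every further error while the condition holds, so a real setup would also need de-duplication or a cooldown.

```python
from collections import deque
from datetime import datetime, timedelta


class ErrorRateAlert:
    """Fire when at least `threshold` ERROR events arrive within `window`."""

    def __init__(self, threshold: int, window: timedelta) -> None:
        self.threshold = threshold
        self.window = window
        self.error_times: deque[datetime] = deque()

    def observe(self, timestamp: datetime, level: str) -> bool:
        """Record one event (assumed in time order); True if the alert fires."""
        if level != "ERROR":
            return False
        self.error_times.append(timestamp)
        # Drop errors that have slid out of the window.
        while self.error_times and timestamp - self.error_times[0] > self.window:
            self.error_times.popleft()
        return len(self.error_times) >= self.threshold


alert = ErrorRateAlert(threshold=3, window=timedelta(minutes=1))
start = datetime(2024, 1, 1, 12, 0, 0)
events = [(0, "INFO"), (10, "ERROR"), (20, "ERROR"), (95, "ERROR"), (100, "ERROR"), (110, "ERROR")]

for offset, level in events:
    if alert.observe(start + timedelta(seconds=offset), level):
        print("ALERT at", start + timedelta(seconds=offset))
```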

Conclusion

I hope this blog post helps you understand how a log aggregator can be helpful and what to look for in a logging system. A good logging solution can help you get the most out of your logs and identify issues early.

Now that you have a good grasp of what a log aggregation service looks like, you should look at the logging services provided by SolarWinds® Loggly®. It can help you aggregate your event data into one place using the techniques I’ve mentioned above, and a free trial is waiting for you.

This post was written by Erik Lindblom. Erik has been a full stack developer for the last 13 years. During that time, he’s tried to understand everything that’s required to deliver high-quality, valuable software. Today, this means using cloud services, microservices techniques, and container technologies. Tomorrow? Well, he’s ready to find out.

The Loggly and SolarWinds trademarks, service marks, and logos are the exclusive property of SolarWinds Worldwide, LLC or its affiliates. All other trademarks are the property of their respective owners.

Loggly Team