Loggly Q&A: Unearthing the Value of Dark Data

Jon Gifford is Loggly’s Chief Search Officer. We spoke with him today about the concept of dark data, and why it’s a hidden gold mine for unifying IT and business. Jon fell in love with search when he realized that it was the best way of manipulating huge amounts of messy, constantly evolving data. For more than 15 years, he has been working on systems that make that power available to people who just want to get things done.

Loggly: “Dark data” sounds pretty scary. How would you define it?

Gifford: Dark data is any data sitting around on your systems that you don’t have easy access to or you’re not extracting value from because you don’t have the right tools. It can be nearly any type of data such as application log files or system log files that only get reviewed when there’s a problem, if at all. Basically, dark data is data that you don’t know that you have or that is hidden in silos. It’s only “scary” if you neglect to make proper sense of it.

Loggly: You mentioned log files. What other data types constitute dark data?

Gifford: Log files are the poster child, but you can also think of data hidden in a database that’s hard to access. You might have a customer ID but you want to find the customer name or company, and it’s in different systems. You’ve got to jump through hoops to merge that data and find your answer. So the database itself isn’t dark in terms of its main users, but the fact that it is siloed means you’re not getting as much value as you could from it.
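The customer ID example can be made concrete with a small join. This is only a sketch under assumed names: `enrich`, the event records, and the customer lookup table are hypothetical stand-ins for two separate systems, not anything from Loggly.

```python
def enrich(events, customers):
    """Attach customer name and company to each event, matched on customer_id.

    `events` is a list of dicts from one system (for example, parsed log lines);
    `customers` maps customer_id to a dict from another system (for example, a CRM).
    Both shapes are assumptions made for this illustration.
    """
    enriched = []
    for event in events:
        # Unknown IDs get None for both fields instead of raising.
        customer = customers.get(event["customer_id"], {})
        enriched.append({
            **event,
            "name": customer.get("name"),
            "company": customer.get("company"),
        })
    return enriched


events = [{"customer_id": 7, "action": "login"}, {"customer_id": 9, "action": "export"}]
customers = {7: {"name": "Ada", "company": "Example Corp"}}
joined = enrich(events, customers)
```

Once both data sets live in one place, this kind of lookup becomes a query rather than a manual hunt through separate systems.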

Modern service-oriented architectures commonly have REST APIs that provide a lot of valuable data about the state of the service, but if you’re not collecting this on a regular basis it is effectively lost. Automated gathering of that data is easy and provides a hugely valuable data set that can be correlated directly with the logs those services also generate.
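As a rough illustration of that kind of automated gathering, the sketch below polls a status endpoint and writes each response as one timestamped JSON line. The endpoint URL, the service name, and the function names are assumptions for this example; a real service's status API will differ.

```python
import json
import time
from typing import Callable
from urllib.request import urlopen


def fetch_status(url: str) -> dict:
    """Fetch a JSON status document from a service endpoint (URL is hypothetical)."""
    with urlopen(url, timeout=5) as response:
        return json.load(response)


def snapshot_line(service: str, status: dict, now: float) -> str:
    """Render one status snapshot as a single JSON log line."""
    record = {"timestamp": now, "service": service, "status": status}
    return json.dumps(record, sort_keys=True)


def collect(service: str, url: str, sink: Callable[[str], None],
            fetch: Callable[[str], dict] = fetch_status,
            rounds: int = 1, interval_s: float = 60.0) -> None:
    """Poll the endpoint `rounds` times, passing one line per poll to `sink`."""
    for i in range(rounds):
        sink(snapshot_line(service, fetch(url), time.time()))
        if i < rounds - 1:
            time.sleep(interval_s)
```

Passing `fetch` and `sink` as parameters keeps the sketch testable without a network; in practice `sink` might append to a log file that is already being shipped alongside the service's own logs.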

Finally, in a virtualized environment, there can be data that is not just dark, but ephemeral. When a virtual machine goes away, you may also lose all of the data that was resident on its local virtual disks. Virtualization gives you elasticity, but you also have to consider what data is lost when you shrink your computing resources.

Loggly: How does data get hidden or dark? Has the problem worsened over the years?

Gifford: Growing application complexity and data density have been big contributing factors. The more complex your systems are, the harder it is to identify data and discern its value. A typical system these days uses tens or hundreds of different libraries and many third-party applications or services, each of which does its own thing. Staying on top of all of this is tough. The fact that storage continuously drops in price also means that you can afford to be a little less careful about how you’re using it, so there is a natural tendency for data on disk to grow over time.

There’s a dearth of good tools to help uncover the value of dark data. The ability to run a huge MapReduce job to generate analytics has only recently become an affordable way to manage all of this data. What I see happening is that the available tools for managing big data and dark data are only getting better. But you still have to make the mental leap to feed all the data into the system and expect it to unearth useful things.

If you compare what’s available today with older tools such as grep, sed, sort, uniq, awk, and perl, we are at a different level now. Because we can bring the full power of modern search and analytics tools to bear on the raw data, we can do far more, far more easily. Not only that, because this power is available as a service, every improvement we make is available to every user, immediately. You don’t need to be an expert in extracting data anymore; instead, you can focus on using that data.

Loggly: What’s the difference between big data and dark data?

Gifford: There’s more dark data than big data, because many companies don’t have the tools to work with all of their data yet. Dark data is usually less structured and has more variety than big data, because the existing big data tools are most effective on clean or condensed content. These tools can also be used to create clean data from messy data, but it’s not uncommon to filter out the noise before it hits your big data store. The cognitive load for understanding dark data is also much higher. It doesn’t necessarily have the same self-descriptive information as other data types. For instance, while log files have a lot of valuable information, there are also plenty of logs that don’t deliver any direct value to IT or the business. Dark data is only dark because the tools largely don’t exist to shine light on it.

Loggly: How does Loggly help with the dark data challenge?

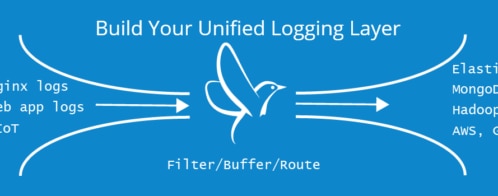

Gifford: If you can use a tool like Loggly, you can now send a much broader and larger set of data in for analysis. You can send not just application logs but system logs – basically anything being written to a file in a text form you can send through Loggly. You can also send JSON data, direct from your applications. Loggly makes it far easier to look at all this data; we’ve tried to build a system that doesn’t constrain what you can send. As well, the analytics in Loggly are more powerful than what you could do with older tools. You have all the data in one place and you don’t need to correlate data across different machines and formats. This opens up a lot of doors for dark data.
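To show what sending JSON directly from an application can look like on the application side, here is a minimal sketch using Python's standard `logging` module. The `JsonFormatter` class, the `make_json_logger` helper, and the `fields` convention are assumptions for this example; it only produces JSON lines and does not show how they are shipped to Loggly or any other service.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Format each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured fields passed as extra={"fields": {...}} are merged in.
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload, sort_keys=True)


def make_json_logger(name: str, stream) -> logging.Logger:
    """Build a logger that writes JSON lines to `stream`."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    return logger


buffer = io.StringIO()
log = make_json_logger("checkout", buffer)
log.info("order placed", extra={"fields": {"customer_id": 42}})
```

Emitting structured records like this means fields such as `customer_id` arrive already named, so they can be searched and aggregated without writing parsing rules first.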

Loggly: Once you’re able to uncover dark data, how do you put it to use?

Gifford: If something is happening with an app or service, now you don’t have to physically get into the box to try and figure out what’s going on. You can get to the data ASAP using Loggly or whatever repository you have. You can review your daemon logs and see how much mail your organization is sending without having to actually look through the details of all those messages. IT people can get answers faster and be more proactive. But you still need people who are able to interpret the data and understand how it can be reused to solve issues or track events and metrics over time.

Many organizations still don’t understand the importance of gaining an historical perspective on data. So if you’ve got all this previously dark data now available, you can look back and understand whether the situation that is happening right now is actually normal behavior based on typical patterns of activity or if there’s something potentially bad going on. This turns the unknown unknowns of dark data into “known knowns” that help you understand your systems at a much deeper level.
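One simple way to ask whether the current situation is normal is to compare the latest measurement against historical values. The sketch below uses a basic standard-deviation check; the function name, the threshold of 3, and the sample numbers are assumptions for illustration, not a description of how Loggly's analytics work.

```python
from statistics import mean, stdev
from typing import Sequence


def is_unusual(history: Sequence[float], current: float, threshold: float = 3.0) -> bool:
    """Return True if `current` is more than `threshold` sample standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        # Not enough history to judge what normal looks like.
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # History is perfectly flat, so any change at all is a deviation.
        return current != mu
    return abs(current - mu) / sigma > threshold


# Hypothetical hourly error counts taken from previously collected logs.
errors_per_hour = [100, 102, 98, 101, 99]
```

With this history, a value such as 103 falls within normal variation while 150 would be flagged. Real traffic usually has daily and weekly cycles, so a practical baseline would compare like with like (for example, the same hour on previous days).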

Loggly: What’s the potential for dark data?

Gifford: In the future, the tools will become more powerful, cheaper, and faster. As a result, the friction will gradually decrease and more and more dark data will be collected for analysis. But that doesn’t mean things get easier. Engineers and system administrators today put constraints on what they will log because of the cost of I/O and disk. But in a perfect world, every application would log at the trace or debug level. Over time, with more data, organizations will get a higher quality of data. And our use of this data will evolve from debugging an application to using that same analysis for monitoring, and then even passing it along to the product team for help in building more relevant products. At some point, the sales team might want to look at that data for information on how new features correlate to the pipeline. So, dark data use can potentially accelerate around the entire company. It will be easier to do this, because IT departments won’t be managing eight or 10 different tools. Instead, they’ll be able to look at everything in one place and share that information across the business.

Loggly: Is Loggly going to be that central tool?

Gifford: With our rapid growth, Loggly is on the right path to be that central place to look for and share data across the business. Data wants to be free, and at Loggly we want to democratize the insights. We challenge ourselves to be the poster child.

People may not know that you can also send structured data to Loggly, not just logs. This is an evolution of understanding as much as an evolution in the technology. Because Loggly is designed to scale to massive amounts of data and has a built-in analytics tool, we offer an interesting alternative view.

Today, we have ops, developers, and product and business managers utilizing Loggly inside our company. The insights we gain help us manage our environment, optimize product development cycles, and communicate with customers in the most relevant manner. With previously dark data from log files, our marketing team leverages customer product and feature use to recommend additional use cases to explore, and our sales team gains visibility into any struggle as customers adopt features.


Jon Gifford