Logs are crucial for solving issues. In a microservices environment, logging is even more important: more moving parts mean more dependencies, and it’s less obvious where to start debugging when something goes wrong. In a Kubernetes environment, where a typical system consists of dozens or even hundreds of containers, a solid logging architecture is essential.

Kubernetes provides some basic logging capabilities, but for a bulletproof production-grade solution, you need to implement something more. In this post, you’ll learn how to get started with Kubernetes cluster-level logging.

Let’s start by reviewing the logging architecture of a Kubernetes cluster. We know the importance and main purpose of log files, right? We need them mainly for debugging, which means we often need to answer questions like “what happened to the application on this day at this time?” However, by default in a Kubernetes cluster, a pod’s logs have the same lifetime as the pod: when the pod is deleted, its logs disappear. Fortunately, the kubelet keeps the logs of a container’s previous instance, which you can read with `kubectl logs --previous`. So if your container crashed and a new one is already running, you can still access logs from the crashed container.

But what if you get a report of an issue that happened, for example, three days ago? You check and see that since then the container has been restarted, evicted, or upgraded multiple times. You won’t be able to see the logs of an instance several versions back, unless you implement cluster-level logging.

What is cluster-level logging? It’s a solution to the above problem (and to other problems we haven’t mentioned yet). Cluster-level logging separates log storage and life cycle from pods and nodes. In other words, Kubernetes is no longer responsible for keeping logs; a separate service or tool collects and manages them. The most obvious benefit is that logs are shipped out of the pod and stored separately from the cluster, so you no longer lose logs when a pod dies.

To implement cluster-level logging for your Kubernetes cluster, you need two main things: a logging agent and a logging back end.

The logging agent is responsible for extracting logs from pods and forwarding them to your desired destination. A logging back end can be a tool, a service, or a clustered solution responsible for storing and processing your logs. Your productivity and debugging capabilities will depend mainly on the quality of your logging back end. There are many solutions on the market, from simple tools that only perform basic log storage and aggregation to services offering sophisticated anomaly detection, live tailing, and integration with the rest of your monitoring tools.
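To make the agent’s job a little more concrete, here is a minimal Python sketch of one step a node-level agent typically performs: parsing the container runtime’s log files before forwarding them. It assumes the CRI log format used by containerd and CRI-O, where each line reads `<timestamp> <stream> <P|F> <message>` and `P` marks a partial line that continues on the next one. The function names and the metadata fields added by `enrich` are hypothetical, not any particular agent’s schema.

```python
import json
from typing import Iterable, Iterator


def parse_cri_lines(lines: Iterable[str]) -> Iterator[dict]:
    """Turn CRI-format log lines into records, joining partial ("P") chunks."""
    pending: dict = {}  # stream name -> (timestamp of first chunk, list of chunks)
    for raw in lines:
        raw = raw.rstrip("\n")
        if not raw:
            continue
        parts = raw.split(" ", 3)
        if len(parts) == 3:
            parts.append("")  # an entry whose message is empty
        if len(parts) != 4 or parts[2] not in ("P", "F"):
            continue  # skip lines that are not in CRI format
        timestamp, stream, tag, message = parts
        first_ts, chunks = pending.setdefault(stream, (timestamp, []))
        chunks.append(message)
        if tag == "F":
            # Final chunk: emit the whole entry, stamped with its first chunk's time.
            del pending[stream]
            yield {"time": first_ts, "stream": stream, "log": "".join(chunks)}


def enrich(record: dict, namespace: str, pod: str, container: str) -> str:
    """Attach Kubernetes metadata (hypothetical field names) and serialize to JSON."""
    return json.dumps({**record, "namespace": namespace, "pod": pod, "container": container})


if __name__ == "__main__":
    sample = [
        "2024-05-01T10:00:00.000000001Z stdout F server started",
        "2024-05-01T10:00:01.000000001Z stderr P connection ",
        "2024-05-01T10:00:01.000000002Z stderr F refused",
    ]
    for record in parse_cri_lines(sample):
        print(enrich(record, "shop", "checkout-7d9f", "api"))
```

Buffering partial chunks per stream matters because stdout and stderr lines can be interleaved in the same file.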

Now that you know what benefits cluster-level logging brings and how it works, it’s time to explain how you can implement it in your cluster. First, you need to decide where to place the logging agents. The two most common approaches are on the nodes or as sidecar containers in the pods. The first option requires installing a logging agent on every Kubernetes node (typically as a DaemonSet); each agent then gathers logs from all containers running on that node. The second option requires deploying a logging agent as a sidecar container in each pod; each agent is then responsible for gathering logs only from the containers running in the same pod.
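At its core, the node-level option is one process per node reading every container’s log file. The following Python sketch shows that loop under simplifying assumptions: logs live under `/var/log/containers` (the usual location on Kubernetes nodes, but verify it on yours), and log rotation is ignored. The `NodeLogCollector` class is hypothetical; production agents such as Fluentd also handle rotation, persist their read positions, and parse the runtime’s log format.

```python
import glob
import os


class NodeLogCollector:
    """Collect new, complete lines from every *.log file in a directory.

    A byte offset is remembered per file so each line is returned once.
    Rotation and truncation are not handled in this sketch.
    """

    def __init__(self, log_dir: str = "/var/log/containers"):
        self.log_dir = log_dir
        self.offsets = {}  # path -> byte offset of the first unread byte

    def poll(self) -> list:
        """Return (file name, line) pairs written since the previous poll."""
        collected = []
        for path in sorted(glob.glob(os.path.join(self.log_dir, "*.log"))):
            offset = self.offsets.get(path, 0)
            with open(path, "rb") as f:
                f.seek(offset)
                while True:
                    line = f.readline()
                    if not line.endswith(b"\n"):
                        break  # end of file, or a line the container is still writing
                    text = line.decode("utf-8", "replace").rstrip("\n")
                    collected.append((os.path.basename(path), text))
                    offset = f.tell()
            self.offsets[path] = offset
        return collected
```

An agent built this way would call `poll()` in a loop and forward each line to the logging back end. Because a single process reads every file on the node, adding pods does not add agents.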

As you may guess, running logging agents on the nodes is easier and also more efficient. For example, if you have 10 nodes in a cluster with 50 single-container pods running on each node, the agent-per-node approach needs only 10 logging agents to gather logs from all containers in the cluster. With the sidecar approach, you’d need 500 logging agents (one for each pod).
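The arithmetic behind this comparison fits in a few lines. This Python helper assumes every node runs the same number of pods and that each sidecar agent serves exactly one pod; `agents_needed` and its strategy names are illustrative only.

```python
def agents_needed(nodes: int, pods_per_node: int, strategy: str) -> int:
    """Number of logging agents for a cluster with uniform pod density."""
    if strategy == "per-node":
        return nodes  # one agent (e.g., a DaemonSet pod) on each node
    if strategy == "sidecar":
        return nodes * pods_per_node  # one sidecar agent in every pod
    raise ValueError(f"unknown strategy: {strategy!r}")


print(agents_needed(10, 50, "per-node"))  # 10
print(agents_needed(10, 50, "sidecar"))   # 500
```

The gap grows linearly with pod density: every extra pod adds an agent in the sidecar model but none in the per-node model.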

This doesn’t mean, however, that node-based logging agents are always better. It all depends on your use case. In fact, you can even run a mixed solution, with node-based logging agents gathering logs from most containers and sidecar logging agents for a few edge-case applications. Sidecar logging agents are useful, for example, when an application can’t write its logs to the standard output and standard error streams, or when you only need to stream logs from one part of an application and don’t care about the rest.
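One common variant, which the Kubernetes documentation calls a streaming sidecar container, reads the application’s log file and writes it to the sidecar’s own stdout, where node-level tooling can pick it up. Here is a Python sketch of that idea; the `keep` filter illustrates the “only one part of an application” case, and the function name and parameters are assumptions for this example. Log rotation is not handled.

```python
import sys
import time
from typing import Callable, Optional, TextIO


def stream_file(path: str,
                out: TextIO = sys.stdout,
                keep: Optional[Callable[[str], bool]] = None,
                follow: bool = True,
                poll_interval: float = 0.5) -> None:
    """Copy complete lines from `path` to `out`, optionally filtered by `keep`.

    With follow=True this behaves like `tail -f` and never returns;
    with follow=False it stops at the current end of the file.
    """
    with open(path, "r", encoding="utf-8", errors="replace") as f:
        partial = ""
        while True:
            chunk = f.readline()
            if chunk:
                partial += chunk
                if partial.endswith("\n"):
                    line, partial = partial.rstrip("\n"), ""
                    if keep is None or keep(line):
                        out.write(line + "\n")
                        out.flush()
                continue
            if not follow:
                return
            time.sleep(poll_interval)  # wait for the application to write more
```

In a sidecar you might call it as `stream_file("/var/log/app/payments.log", keep=lambda line: "payment" in line)`, where the path is hypothetical and would point at a volume shared with the application container.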

If you’re looking for the easiest way to get the logs out of your cluster, take a look at rKubeLog. It doesn’t require DaemonSets, sidecars, fluentd, or persistent volume claims, so you can get it up and running within minutes. To read more about setting up rKubeLog, check out the documentation.

Once you decide which type of logging agent to run, you need to determine where to send your logs. As mentioned earlier, this could be a dedicated application running on the cluster itself, software running outside your Kubernetes cluster, or a cloud-based log management solution.
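Whichever destination you choose, agents usually buffer records and ship them in batches rather than one at a time. This Python sketch keeps the destination pluggable: `send` is a hypothetical callable you would implement for your back end (for example, an HTTP POST), and newline-delimited JSON is just one common payload format, so check what your back end actually accepts.

```python
import json
from typing import Callable, List


class BatchingForwarder:
    """Buffer log records and hand them to `send` in batches."""

    def __init__(self, send: Callable[[str], None], batch_size: int = 100):
        self.send = send  # hypothetical transport to your chosen back end
        self.batch_size = batch_size
        self.buffer: List[dict] = []

    def emit(self, record: dict) -> None:
        self.buffer.append(record)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        if not self.buffer:
            return
        payload = "\n".join(json.dumps(r) for r in self.buffer)  # newline-delimited JSON
        self.send(payload)
        # Clear only after send succeeds, so a failed send leaves records for a retry.
        self.buffer = []
```

Swapping an in-cluster service for an external or cloud-based back end then means changing only the `send` function, not the collection logic.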

What’s best here also depends on your use case. If you have many well-trained engineers in house and can put in the necessary maintenance effort, building your own logging back end may be an option for you. Be aware, however, that a feature-rich logging back end is much more beneficial for your company than a simple system. Microservice architectures are often complicated, with many dependencies between containers, so debugging isn’t always as simple as finding error messages.

That’s why cloud-based log management solutions are worth checking out. They relieve you of all the installation and maintenance effort, but, most importantly, they usually offer predictive features to help you find issues before they have a real impact on users. How is this possible? A modern log analytics tool can integrate with your CI/CD pipeline and monitoring tools and perform advanced anomaly detection. Such tools also “understand” all the layers of your infrastructure (containers, orchestrators, and virtual machines) and can correlate data across them, which can be extremely useful for debugging.
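Commercial tools use their own, more sophisticated models, but the basic idea of anomaly detection on logs can be shown with a rolling z-score over per-minute error counts. This Python sketch flags a minute whose count lies more than `threshold` standard deviations from the mean of the previous `window` minutes. The window size, threshold, and sample counts are arbitrary assumptions, and this is not how Loggly or any specific product works.

```python
from statistics import mean, pstdev


def find_anomalies(counts: list, window: int = 5, threshold: float = 3.0) -> list:
    """Return indexes of counts that deviate sharply from the trailing window."""
    anomalies = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu, sigma = mean(history), pstdev(history)
        if sigma == 0:
            # Flat history: treat any change as anomalous.
            if counts[i] != mu:
                anomalies.append(i)
        elif abs(counts[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


errors_per_minute = [2, 3, 2, 4, 3, 3, 2, 40, 3]
print(find_anomalies(errors_per_minute))  # [7]
```

Note how the spike inflates the standard deviation of the windows that follow it; that is one reason real systems use longer baselines or more robust statistics.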

For example, imagine your users are experiencing slow loading times in your web application. You see that the CPU usage of your nodes is high. But is that the cause or a consequence? Traditionally, you’d need to check several metrics, logs, and dashboards to find the answer. With a good log management tool, you may get the answer before you even realize there’s an issue.

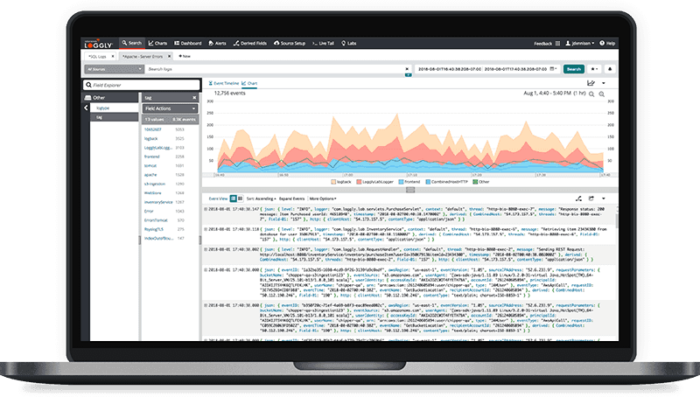

One of these log management services is SolarWinds® Loggly®. It offers a wide range of integrations, making cluster-level logging easy to implement, as well as some unique features that greatly improve root cause analysis.

Deciding on a logging agent strategy and choosing a logging back end are the two main tasks needed to start with Kubernetes cluster-level logging. That’s the majority of the job, but not all of it. You need to remember three additional things.

First, logs are meant to help you solve issues, so try to design your solution in a way that helps you find the root cause of issues faster. Logs that aren’t aggregated properly can produce a large number of useless messages.
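One simple form of aggregation is grouping messages that differ only in variable parts such as IDs, ports, or durations, so that a thousand near-identical lines show up as one template with a count. This Python sketch treats every run of digits as a variable, which is a crude assumption suitable only as an illustration; `summarize` is a hypothetical helper.

```python
import re
from collections import Counter

_NUMBER = re.compile(r"\d+")


def summarize(messages: list) -> list:
    """Group messages by template (digits replaced with <N>), most frequent first."""
    templates = Counter(_NUMBER.sub("<N>", message) for message in messages)
    return templates.most_common()


logs = [
    "timeout after 30s on connection 17",
    "user 5 logged in",
    "timeout after 31s on connection 18",
]
for template, count in summarize(logs):
    print(count, template)
```

Seeing “2 timeout after <N>s on connection <N>” is far quicker to act on than scrolling past each individual timeout.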

Secondly, be aware that the more microservices you have, the more logs you’ll have to deal with. If you give your developers total freedom over logging, you may quickly see gigabytes of logs created per day. INFO messages may be useful for developers, but they aren’t crucial for debugging. Tools like Loggly can significantly improve debugging, but do yourself a favor and spend some time on basic log cleanup. Try to avoid sending health check messages, which may be generated every second by, for example, 100 containers. That’s 100 unimportant messages per second, or more than 8.6 million per day. It’s easy to instruct your logging agent to filter these out.
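In practice you would configure this in your logging agent’s own filtering options, but the logic is simple enough to sketch in Python. The patterns below are assumptions: they match requests to common health check paths such as `/healthz` and the `kube-probe/` user agent that the kubelet sends with HTTP probes. Adjust them to how health checks actually appear in your logs. The last lines scale the 100-messages-per-second example to a full day.

```python
import re

# Hypothetical patterns; tune them to your own applications' access logs.
HEALTH_CHECK = re.compile(r'"GET /(?:healthz|readyz|livez|health)\b|kube-probe/')


def drop_health_checks(lines: list) -> list:
    """Keep only lines that do not look like health check traffic."""
    return [line for line in lines if not HEALTH_CHECK.search(line)]


SECONDS_PER_DAY = 24 * 60 * 60
containers, checks_per_second = 100, 1
print(containers * checks_per_second * SECONDS_PER_DAY)  # 8640000 messages per day
```

Filtering at the agent, rather than in the back end, also saves the network and storage cost of shipping those messages in the first place.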

Thirdly, take your debugging efforts to the next level by integrating logs with other monitoring data, such as metrics. You can do this with the Loggly and SolarWinds AppOptics™ integration. Combining logs with infrastructure performance metrics helps you spot issues and find their root cause faster. You can read more about it in this blog post.
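Correlating logs with metrics largely comes down to lining them up by time. This Python sketch attaches to each log event the most recent CPU sample taken at or before it. The data shapes (timestamps in seconds, CPU percentages), the sample values, and the function name are illustrative assumptions, not the AppOptics or Loggly data model.

```python
from bisect import bisect_right


def annotate_with_metric(logs: list, samples: list) -> list:
    """Pair each (timestamp, message) log with the latest metric sample at or before it.

    `samples` must be a list of (timestamp, value) tuples sorted by timestamp.
    Logs earlier than the first sample get None.
    """
    times = [t for t, _ in samples]
    annotated = []
    for ts, message in logs:
        i = bisect_right(times, ts)  # number of samples with time <= ts
        value = samples[i - 1][1] if i else None
        annotated.append((ts, message, value))
    return annotated


cpu_percent = [(0, 35.0), (60, 41.0), (120, 92.0)]
events = [(30, "GET /cart 200 in 80 ms"), (125, "GET /cart 200 in 2300 ms")]
for ts, message, cpu in annotate_with_metric(events, cpu_percent):
    print(ts, message, f"cpu={cpu}%")
```

Seeing slow requests line up with a CPU spike does not settle cause versus consequence on its own, but it tells you where to look next.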

In this post, we discussed Kubernetes logging architecture. We focused on the cluster-level logging concept, as it brings many benefits over default Kubernetes logging, and when it comes to logs, those benefits matter. When your application is down or your users experience issues, reading logs is like mining for gold. You know that somewhere you’ll find the one message that will help you fix your application, but first you need to read through lots of generic log entries. Therefore, any automation, anomaly detection mechanism, or proactive monitoring module is worth its weight in gold. If you want to test one of these gold-mining machines, sign up here for your free Loggly account.

This post was written by Dawid Ziolkowski. Dawid has 10 years of experience, starting as a network/system engineer, then working in DevOps, and most recently as a cloud-native engineer. He’s worked for an IT outsourcing company, a research institute, a telco, a hosting company, and a consultancy, so he’s gathered a lot of knowledge from different perspectives. Nowadays he helps companies move to the cloud and/or redesign their infrastructure for a more cloud-native approach.