Benchmarking Java logging frameworks

One of the most common arguments against logging is its impact on your application’s performance. There’s no doubt logging can cost you some speed; the question is how much. When you’re armed with some real numbers, it’s much easier to find the right amount to log. In this article, we’ll compare the performance and reliability of four popular Java logging frameworks.

The Contenders

For this test, we investigated four of the most commonly used Java logging frameworks:

- Log4j 1.2.17

- Log4j 2.3

- Logback 1.1.3 using SLF4J 1.7.7

- JUL (java.util.logging)

We tested each framework using three types of appenders: file, syslog, and socket. For syslog and socket appenders, we sent log data to a local server over both TCP and UDP. We also tested asynchronous logging using each framework’s respective AsyncAppender. Note that this test doesn’t include asynchronous loggers, which promise even faster logging for Log4j 2.3.

Setup and Configuration

Our goal was to measure the amount of time needed to log a number of events. Our application logged 100,000 DEBUG events (INFO events for JUL) over 10 iterations. We actually ran 11 iterations but discarded the first, because its run time was inflated by startup costs while the JIT warmed up. To simulate a workload, we generated prime numbers in the background. We repeated this test three times and averaged the results. This stress test deliberately drives the logging frameworks harder than a typical workload would, because we wanted to push them to their limits. In a typical workload, you won’t see as many dropped events, because events are more spread out over time, which allows the system to catch up.
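The measurement loop described above can be sketched roughly as follows. This is a minimal illustration rather than the harness used for the article: the class and method names are hypothetical, it assumes the event count applies to each iteration, it uses JUL so that it has no external dependencies, it omits the background prime-number workload, and it relies on Java 8 lambdas for brevity even though the tests ran on Java SE 7.

```java
import java.io.IOException;
import java.util.logging.FileHandler;
import java.util.logging.Level;
import java.util.logging.Logger;
import java.util.logging.SimpleFormatter;

// Hypothetical harness; names and structure are illustrative assumptions.
class LoggingBenchmark {

    /** Times `events` calls to the given logging action and returns elapsed nanoseconds. */
    static long timeIterationNanos(Runnable logOneEvent, int events) {
        long start = System.nanoTime();
        for (int i = 0; i < events; i++) {
            logOneEvent.run();
        }
        return System.nanoTime() - start;
    }

    /** Runs one discarded warm-up iteration, then `measured` timed ones; returns the mean in ms. */
    static double run(Runnable logOneEvent, int events, int measured) {
        timeIterationNanos(logOneEvent, events); // warm-up iteration, result thrown away
        long totalNanos = 0;
        for (int i = 0; i < measured; i++) {
            totalNanos += timeIterationNanos(logOneEvent, events);
        }
        return totalNanos / (double) measured / 1_000_000.0;
    }

    public static void main(String[] args) throws IOException {
        Logger logger = Logger.getLogger("benchmark");
        logger.setUseParentHandlers(false); // keep events off the console
        // "%t" expands to the system temporary directory in FileHandler patterns.
        FileHandler handler = new FileHandler("%t/logging-benchmark.log");
        handler.setFormatter(new SimpleFormatter());
        logger.addHandler(handler);

        int events = 1_000; // the article used 100,000; reduced so the sketch runs quickly
        double meanMs = run(() -> logger.log(Level.INFO, "benchmark event"), events, 10);
        System.out.printf("mean time per iteration: %.2f ms%n", meanMs);

        handler.close();
    }
}
```

Passing the logging call in as a Runnable keeps the timing code identical across frameworks, so only the action being timed changes from one run to the next.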

We performed all testing on an Intel Core i7-4500U CPU with 8 GB of RAM and Java SE 7.

In the interest of fairness, we chose to keep each framework as close to its default configuration as possible. You might experience a boost in performance or reliability by tweaking your framework to suit your application.

Appender Configuration

We configured our file appenders to append entries to a single file using a PatternLayout of %d{HH:mm:ss.SSS} %-5level - %msg%n. Our socket appenders sent log data to a local socket server, which then wrote the entries to a file (see this link for an example using Log4j 1.2.17). Our syslog appenders sent log data to a local rsyslog server, which then forwarded the entries to Loggly.
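JUL has no PatternLayout, so a comparable layout has to be written as a custom Formatter. The sketch below is one hypothetical way to approximate the pattern above; the class name is an assumption, and the article does not say how the JUL output was actually formatted.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.logging.Formatter;
import java.util.logging.Level;
import java.util.logging.LogRecord;

// Hypothetical JUL formatter approximating "%d{HH:mm:ss.SSS} %-5level - %msg%n".
class PatternLikeFormatter extends Formatter {
    @Override
    public String format(LogRecord record) {
        // SimpleDateFormat is not thread-safe, so this sketch creates one per call.
        String time = new SimpleDateFormat("HH:mm:ss.SSS").format(new Date(record.getMillis()));
        // %-5s left-justifies the level name in a five-character column, like %-5level.
        return String.format("%s %-5s - %s%n",
                time, record.getLevel().getName(), formatMessage(record));
    }

    public static void main(String[] args) {
        LogRecord record = new LogRecord(Level.INFO, "benchmark event");
        System.out.print(new PatternLikeFormatter().format(record));
    }
}
```

A formatter like this would be attached with Handler.setFormatter. Note that JUL level names such as WARNING are longer than five characters, so the columns will not always line up the way they do with Log4j or Logback levels.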

The AsyncAppender was used with the default configuration, which has a buffer size of 128 events (256 events for Logback) and does not block when the buffer is full.

Test Results

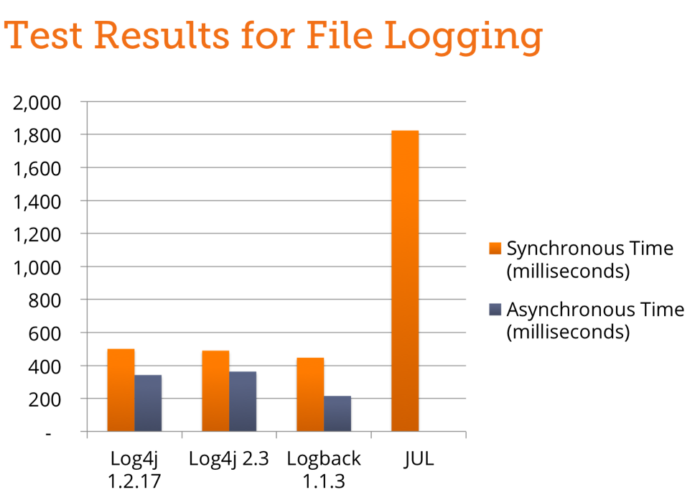

File Appender

Logback came ahead in synchronous file logging, performing 9% faster than Log4j 2.3 and 11% faster than Log4j 1.2.17. All three frameworks significantly outperformed JUL, which took over four times as long as Logback.
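The percentages above are consistent with measuring each gap relative to the slower framework’s run time, which gives roughly 8.8% and 10.8% before rounding. The snippet below reproduces that arithmetic from the table values; the class and method names are hypothetical, and the choice of baseline is an assumption inferred from the numbers.

```java
// Hypothetical helper reproducing the comparisons from the synchronous file appender results.
class RelativeTimes {

    /** Percentage by which the faster run beats the slower one, relative to the slower time. */
    static double percentFaster(double fasterMs, double slowerMs) {
        return (slowerMs - fasterMs) / slowerMs * 100.0;
    }

    public static void main(String[] args) {
        double logback = 447, log4j2 = 490, log4j1 = 501, jul = 1824;
        System.out.printf("Logback vs Log4j 2.3:    %.1f%% faster%n", percentFaster(logback, log4j2));
        System.out.printf("Logback vs Log4j 1.2.17: %.1f%% faster%n", percentFaster(logback, log4j1));
        System.out.printf("JUL vs Logback:          %.2f times as long%n", jul / logback);
    }
}
```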

Using asynchronous appenders, run times decreased noticeably. Logback once again showed the highest performance, but it dropped 76% of its log events in order to do so. This is due to the behavior of Logback’s AsyncAppender, which drops events below WARN level once the queue becomes 80% full. None of the other frameworks dropped any events, whether running synchronously or asynchronously. Log4j 1.2.17 saw improved run times while successfully recording every event. Log4j 2.3 also ran faster than with its synchronous appender, but came third after Log4j 1.2.17.
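To make the discarding behavior concrete, here is a simplified model of a bounded buffer that drops low-priority events once less than 20% of its capacity remains. It is a sketch under stated assumptions, not Logback’s source: the class, enum, and method names are hypothetical, there is no consumer thread draining the queue, the counter is not thread-safe, and a full queue rejects events instead of blocking, following the configuration described in this article.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Simplified, hypothetical model of an async appender that discards low-priority events.
class DiscardingBuffer {
    enum Level { TRACE, DEBUG, INFO, WARN, ERROR }

    private final BlockingQueue<String> queue;
    private final int discardingThreshold;
    private int dropped;

    DiscardingBuffer(int queueSize) {
        this.queue = new ArrayBlockingQueue<>(queueSize);
        this.discardingThreshold = queueSize / 5; // start discarding under 20% free capacity
    }

    /** Returns true if the event was queued, false if it was dropped. */
    boolean append(Level level, String message) {
        boolean nearlyFull = queue.remainingCapacity() < discardingThreshold;
        boolean discardable = level.compareTo(Level.WARN) < 0; // TRACE, DEBUG, INFO
        if (nearlyFull && discardable) {
            dropped++;
            return false;
        }
        if (!queue.offer(message)) { // assumption: reject instead of blocking when full
            dropped++;
            return false;
        }
        return true;
    }

    int dropped() { return dropped; }

    int queued() { return queue.size(); }

    public static void main(String[] args) {
        DiscardingBuffer buffer = new DiscardingBuffer(256);
        for (int i = 0; i < 300; i++) {
            buffer.append(Level.DEBUG, "event " + i);
        }
        // With nothing draining the queue, this prints: queued=206 dropped=94
        System.out.println("queued=" + buffer.queued() + " dropped=" + buffer.dropped());
    }
}
```

In a burst of DEBUG events like the one in this test, the queue hovers near the threshold whenever the writer thread cannot keep up, which is why such a large share of events can be lost even though the appender never blocks the application.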

| Mode | Framework | Time (ms) | Drop Rate |
| --- | --- | --- | --- |
| Synchronous | Log4j 1.2.17 | 501 | 0% |
| Synchronous | Log4j 2.3 | 490 | 0% |
| Synchronous | Logback 1.1.3 | 447 | 0% |
| Synchronous | JUL | 1824 | 0% |
| Asynchronous | Log4j 1.2.17 | 342 | 0% |
| Asynchronous | Log4j 2.3 | 363 | 0% |
| Asynchronous | Logback 1.1.3 | 215 | 76% |

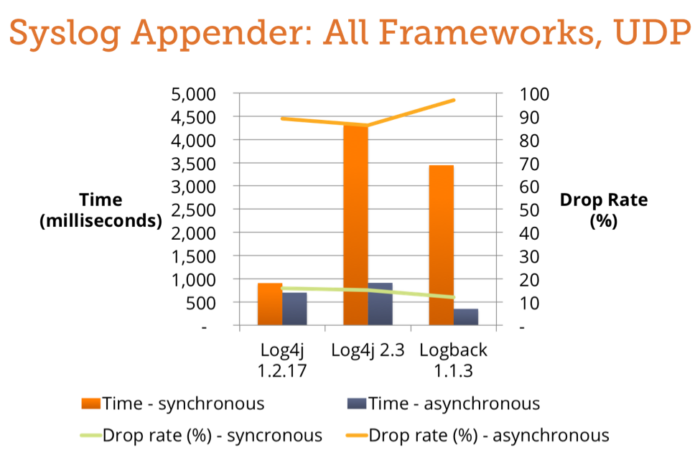

Syslog Appender

UDP

Using UDP, each framework experienced a similar rate of dropped messages due to packet loss. While Log4j 1.2.17 was the fastest, it also experienced the highest drop rate. Compared with Log4j 1.2.17, Log4j 2.3 dropped one percentage point fewer messages but ran 9% slower. Logback (via SLF4J) was somewhat more reliable still, at the cost of a substantial drop in performance: it took more than three times as long as either Log4j version.

Using an asynchronous appender shortened run times but sharply reduced reliability. The most striking difference came for Logback, which ran nearly 10 times faster but dropped eight times as many events.

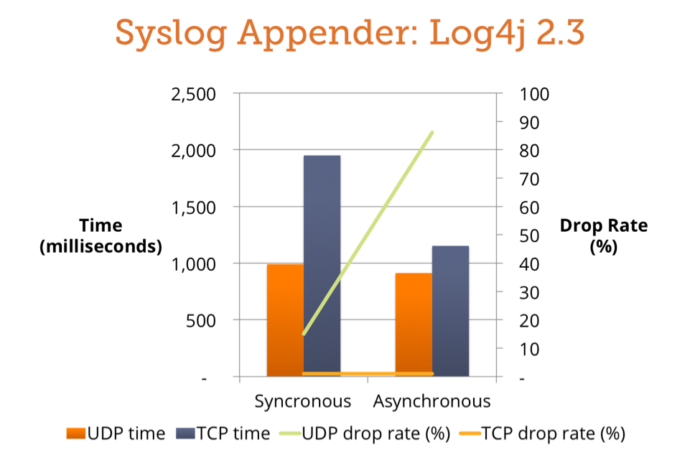

TCP

As expected, TCP with Log4j 2.3 proved to be a much more reliable transmission method. We saw a small number of dropped messages, but it was negligible when compared with UDP. The cost of this higher reliability is a run time that’s nearly twice as long.

With an asynchronous appender, we saw a decent boost in performance with no meaningful increase in dropped messages.

| Mode | Framework | Time (ms), UDP | Drop Rate, UDP | Time (ms), TCP | Drop Rate, TCP |
| --- | --- | --- | --- | --- | --- |
| Synchronous | Log4j 1.2.17 | 908 | 16% | – | – |
| Synchronous | Log4j 2.3 | 991 | 15% | 1950 | < 1% |
| Synchronous | Logback 1.1.3 | 3446 | 12% | – | – |
| Asynchronous | Log4j 1.2.17 | 701 | 89% | – | – |
| Asynchronous | Log4j 2.3 | 913 | 86% | 1153 | < 1% |
| Asynchronous | Logback 1.1.3 | 353 | 97% | – | – |

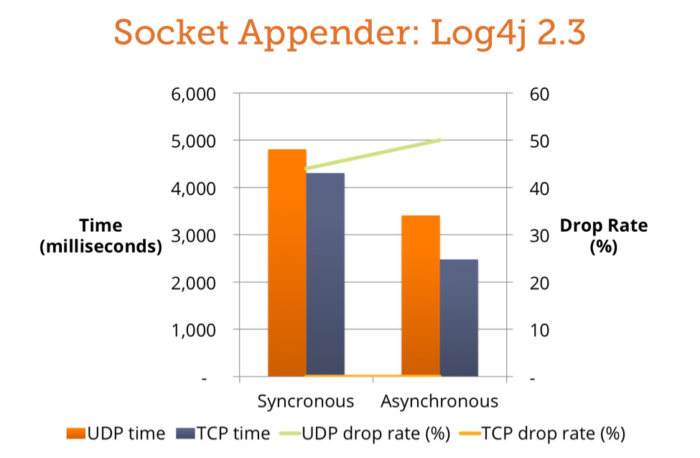

Socket Appender

UDP

Log4j 2.3’s socket appender was the slowest combination we tested. It was also one of the most error prone, dropping 44% of the events sent to it.

Using an asynchronous appender provided an almost 30% improvement in performance, but the drop rate rose by six percentage points, from 44% to 50%.

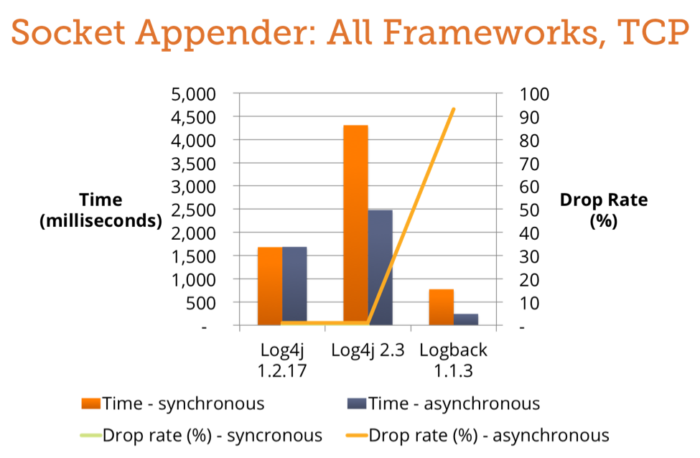

TCP

Log4j 1.2.17 showed a roughly 2.6-second improvement over Log4j 2.3 when using TCP. However, the star of the show is Logback, which managed to finish in less than one-fifth the time of Log4j 2.3.

When the application is logging asynchronously, Log4j 2.3 showed a marked improvement. Log4j 1.2.17 maintained its run time, but showed a small increase in the number of dropped events. Logback maintained its performance lead, but in doing so dropped over 90% of events.

| Mode | Framework | Time (ms), UDP | Drop Rate, UDP | Time (ms), TCP | Drop Rate, TCP |
| --- | --- | --- | --- | --- | --- |
| Synchronous | Log4j 1.2.17 | – | – | 1681 | 0% |
| Synchronous | Log4j 2.3 | 4810 | 44% | 4306 | 0% |
| Synchronous | Logback 1.1.3 | – | – | 774 | 0% |
| Asynchronous | Log4j 1.2.17 | – | – | 1687 | < 1% |
| Asynchronous | Log4j 2.3 | 3410 | 50% | 2480 | 0% |
| Asynchronous | Logback 1.1.3 | – | – | 243 | 93% |

Conclusion

The combination that we found to offer the best balance of performance and reliability is Log4j 1.2.17’s FileAppender using an AsyncAppender. This setup consistently completed in the fastest time of any combination that dropped no events. For raw performance, the clear winner was Logback’s FileAppender using an AsyncAppender.

There’s often a trade-off between fast and reliable logging. Logback in particular maximized performance by dropping a larger number of events, especially when we used an asynchronous appender. Log4j 1.2.17 and 2.3 tended to be more conservative but couldn’t provide nearly the same performance gains.

Other Resources:

- Log4J 2: Performance Close to Insane (Christian Grobmeier)

- The Logging Olympics (Takipi)


Andre Newman