8 Handy Tips to Consider When Logging in JSON

Logging in JSON transforms your logs from raw text lines to a database of fields you can search, filter, and analyze. This gives you way more power than you would get with only raw logs.

Why Use JSON?

JSON makes it easier to search and analyze your data. Say this is a portion of your log file:

I want to figure out how many 29-year-olds are from San Francisco. This may look familiar to people who are used to dealing with raw unstructured logs:

With the above approach, you’ll end up with log entries that include any text that has “29” in it, including the date for Fred who is only 21 years old. So then you end up with an incorrect count, or an even more complicated command.
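To make the difference concrete, here is a minimal Python sketch. The three sample events, including their names, dates, and field names, are made up for illustration. It compares a naive text match with a match on parsed fields.

```python
import json

# Hypothetical sample events; names, dates, and fields are illustrative only.
raw_lines = [
    '{"timestamp": "2015-06-29T10:00:00Z", "name": "Fred", "age": 21, "city": "San Francisco"}',
    '{"timestamp": "2015-06-30T11:30:00Z", "name": "Alice", "age": 29, "city": "San Francisco"}',
    '{"timestamp": "2015-07-01T09:15:00Z", "name": "Bob", "age": 29, "city": "New York"}',
]

# Naive text matching: any line containing "29" and "San Francisco" counts,
# so the "29" in Fred's timestamp produces a false positive.
text_matches = [line for line in raw_lines if "29" in line and "San Francisco" in line]

# Field-based matching: parse each line and compare the actual field values.
events = [json.loads(line) for line in raw_lines]
field_matches = [e for e in events if e["age"] == 29 and e["city"] == "San Francisco"]

print(len(text_matches))   # 2 (Fred is wrongly included)
print(len(field_matches))  # 1 (only Alice)
```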

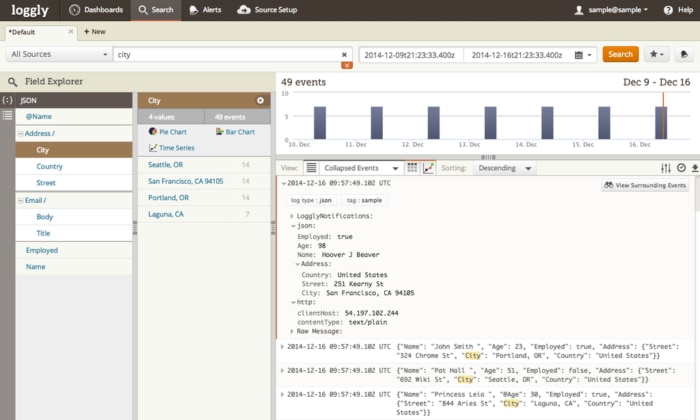

Log management tools like Loggly can automatically parse and analyze JSON data. Using Loggly Dynamic Field Explorer™, you can click on any field, such as the city, and you’ll see a count for each value on the left-hand side. This transforms a complicated search command into a single click. You also unlock powerful features like numeric range searches, statistical trend analysis, and more.

Tips for Logging in JSON

Here are some tips and choices to consider when implementing logging in JSON.

Tip #1: Write New Apps With JSON

If you’re writing a new application, build JSON logging into it from the beginning. It’s always more work to go back and change an existing application to support JSON.

Tip #2: Log Both Errors and Behavior

When you add a product feature, include enough information to log both errors (especially user-facing errors) and behavior or usage. We do this at Loggly, and we have found that having both pieces of data is useful not only for analytics but also for debugging (you can look at everything that led up to a problem). It’s helpful for customer support because our team can see what the customer was doing when they had a problem. And as a product manager, it’s really satisfying to be able to track usage at a feature level because I can make much smarter decisions about where to invest in our product.

Tip #3: Add Context to Your Logging Statements

Many of the Loggly libraries are able to grab contextual information about the program that’s generating your logs. For example, you can capture the name of the program, the hostname, the level or severity of the log message, the name of the file or class where the error occurred, or information about the current request or transaction. Libraries like Log4j will add this automatically, and you can add extra context using NDC fields.

You should also log easily searchable ID numbers, such as a request ID, user ID, session ID, or transaction ID. This is really useful for transaction tracing where you want to see how one transaction was processed by several internal components or services. If you have a service that returns some sort of error message to the client, include an identifier for that request. That way, you can isolate which request led to which error.
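As a rough sketch of this idea in Python, the helper below attaches standard context and a shared request ID to every event, then traces one request across components. The `make_event` helper, the program names, and the field names are hypothetical and are not part of any Loggly library.

```python
import json
import socket
import uuid

def make_event(program, level, message, **context):
    """Build one JSON log line with standard context plus any extra IDs.

    Hypothetical helper for illustration only.
    """
    event = {
        "hostname": socket.gethostname(),
        "program": program,
        "level": level,
        "message": message,
    }
    event.update(context)  # e.g. request_id, user_id, session_id
    return json.dumps(event)

# A request ID is generated once and passed along to every component.
request_id = str(uuid.uuid4())
other_request_id = str(uuid.uuid4())

log_lines = [
    make_event("gateway", "INFO", "request received", request_id=request_id, user_id=42),
    make_event("billing", "INFO", "card charged", request_id=other_request_id, user_id=7),
    make_event("billing", "ERROR", "card declined", request_id=request_id, user_id=42),
]

# Transaction tracing: pull every event for one request, across components.
trace = [e for e in map(json.loads, log_lines) if e["request_id"] == request_id]
for e in trace:
    print(e["program"], e["level"], e["message"])
```

Returning the same `request_id` to the client in an error response would let you look up exactly these events later.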

Tip #4: Include Valuable Fields

In a previous blog post, I shared some data on which JSON fields get the most searches from Loggly customers (based on a sample of 60,000 searches). The top three were:

- json.level or json.severity to instantly filter your logs to show only the events that are errors or warnings

- json.appID, json.AppName, json.microservice, or json.program to easily isolate log messages from specific apps or microservices

- json.environment, json.deployment, or json.platform to tell which logs are coming from QA, staging, or production systems

Tip #5: Always Choose One Data Type per Field

In order to use our field search, you can only have one data type per field: string, number, or JSON object. So you’ll need to choose one and stick with it. While JSON does not impose any limitations per se, the Loggly indexer cannot operate properly if you switch back and forth between types. You’ll still be able to search data in Loggly using full text search but not field searches.
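One way to catch this before your logs are shipped is to scan a sample of events for fields whose type changes. The following is a small sketch with made-up events. It reports Python type names, not whatever names an indexer uses internally.

```python
import json
from collections import defaultdict

# Hypothetical sample: "status" flips between number and string,
# and "user" flips between string and object.
sample_lines = [
    '{"status": 200, "user": "alice"}',
    '{"status": "200", "user": "bob"}',
    '{"status": 404, "user": {"name": "carol"}}',
]

# Collect the set of value types seen for each field name.
types_by_field = defaultdict(set)
for line in sample_lines:
    for field, value in json.loads(line).items():
        types_by_field[field].add(type(value).__name__)

# Any field with more than one type is a candidate for cleanup.
mixed = {field: sorted(types) for field, types in types_by_field.items() if len(types) > 1}
print(mixed)
```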

Tip #6: Choose Your Data Types

JSON supports several data types including strings, numbers, and nested JSON objects. If you put quotes around a number, we will interpret it as a string. If you accidentally quote your numbers, you might wonder why your range searches or trends aren’t working.
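A short Python sketch shows why a quoted number behaves differently. The `response_ms` field name is just an example.

```python
import json

quoted = json.loads('{"response_ms": "250"}')["response_ms"]
unquoted = json.loads('{"response_ms": 250}')["response_ms"]

print(type(quoted).__name__)    # str
print(type(unquoted).__name__)  # int

# A numeric range check works on the number...
in_range = 100 <= unquoted <= 1000

# ...but strings compare character by character, so "250" sorts after "1000".
string_order_is_wrong = "250" > "1000"
print(in_range, string_order_is_wrong)
```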

Also, the JSON standard calls for double quotes. If you send single quotes, Loggly will interpret it as a plain string. Some libraries, such as our JavaScript library, will automatically convert object literals to JSON.
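The same pitfall appears in Python, where printing a dictionary directly produces single quotes. This minimal sketch uses an illustrative event and contrasts that with `json.dumps`, which emits valid JSON.

```python
import json

event = {"city": "San Francisco", "age": 29}

as_repr = str(event)         # Python repr uses single quotes: not valid JSON
as_json = json.dumps(event)  # double-quoted keys and strings: valid JSON

try:
    json.loads(as_repr)
    repr_is_valid = True
except json.JSONDecodeError:
    repr_is_valid = False

print(as_repr)
print(as_json)
print(repr_is_valid)  # False
```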

Some of the libraries support the ability to switch between JSON and string logs, and some do not. Logback and Log4j can support both types of data. Log4net forces you to pick one or the other, so you’d have to convert all your logs to JSON.

Tip #7: Watch How Many Fields You Create

Using fields makes your searches faster because we can index those fields for quick lookup and show you summary counts of the values. Think of them like table columns or dimensions. You don’t want too many (especially unimportant ones), or else you will have too much noise when navigating. Store highly variable data such as numbers as part of the values instead of as part of the field name.

You should normalize your set of JSON field names, just as you would do when designing database columns. For example, JSON events like the following may result in a very large number of unique fields, one for each possible error code:

Instead, generate JSON like this, so there are only three unique fields or keys:
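As a hypothetical sketch of both shapes in Python (the field names `service`, `error_code`, and `count`, and the error counts themselves, are assumptions chosen for illustration and are not taken from Loggly):

```python
import json

# Hypothetical error counts keyed by HTTP status code.
error_counts = {"404": 12, "500": 3, "502": 1, "503": 7}

# Anti-pattern: the error code is baked into the field name,
# so every new code creates a new unique field.
wide_event = {f"error_{code}_count": n for code, n in error_counts.items()}

# Preferred: a fixed set of three keys; the variable data lives in the values.
narrow_events = [
    {"service": "api", "error_code": int(code), "count": n}
    for code, n in error_counts.items()
]

wide_fields = set(wide_event)
narrow_fields = {key for event in narrow_events for key in event}

print(json.dumps(wide_event))
print(json.dumps(narrow_events[0]))
print(len(wide_fields), len(narrow_fields))  # 4 3
```

With the second shape, the number of unique fields stays at three no matter how many distinct error codes appear.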

All paying customers have a minimum of 100 unique fields that will be automatically parsed and indexed. The actual number is dynamically determined based on your volume and plan tier. Regardless of whether you hit your limit or not, all event text is fully indexed as far as search is concerned, so you can always find your events via free-text search.

Tip #8: Anticipate Future Analysis

Enable near-term troubleshooting requirements but also anticipate what context you might want down the road. If you’ll want to analyze longer-term trends like whether the error rate has gone down over the past three months, you’ll want to start logging that information now so that you have it when you want to compare. Err on the side of having too much logging rather than too little because you can easily filter that information out in search. Once the information about the state of your application is gone, it’s impossible to get back.

Getting Started with JSON

You can convert your apps from plain text logs into JSON. Many of our logging libraries support JSON out of the box. For example, if you are using our JavaScript library you can simply create a JSON object and then send it to Loggly.
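The same idea can be sketched in Python using only the standard `logging` module rather than any Loggly library. The `JsonFormatter` class, the `appName` and `environment` values, and the in-memory stream (a stand-in for a real file or network handler) are all assumptions made for this example.

```python
import io
import json
import logging

class JsonFormatter(logging.Formatter):
    """Hypothetical formatter that renders each record as one JSON object."""

    def __init__(self, app_name, environment):
        super().__init__()
        self.app_name = app_name
        self.environment = environment

    def format(self, record):
        return json.dumps({
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "appName": self.app_name,
            "environment": self.environment,
            "message": record.getMessage(),
        })

# In-memory stream used here only so the output can be inspected.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter("billing", "staging"))

logger = logging.getLogger("billing")
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False  # keep the example's output out of the root logger

logger.warning("retrying payment for order %s", 1234)

event = json.loads(stream.getvalue())
print(event["level"], event["message"])
```

Existing `logger.info(...)` and `logger.warning(...)` calls stay unchanged, since only the formatter decides how each record is serialized.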

Using Your JSON Log Data

One common use case that serves as a quick way to demonstrate the power of JSON is a summary of top errors. With this summary you can:

- Monitor how a new code release is going

- See immediately if new errors were introduced by the release

- Prioritize ongoing maintenance work

- Keep everyone focused on delivering a great experience
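Outside of a log management tool, a top-errors summary like this can be approximated in a few lines of Python once the logs are JSON. The sample events and field names below are made up for illustration.

```python
import json
from collections import Counter

# Hypothetical JSON log lines, one event per line.
log_lines = [
    '{"level": "ERROR", "message": "database timeout"}',
    '{"level": "INFO", "message": "user logged in"}',
    '{"level": "ERROR", "message": "database timeout"}',
    '{"level": "ERROR", "message": "payment declined"}',
    '{"level": "ERROR", "message": "database timeout"}',
    '{"level": "WARN", "message": "slow response"}',
    '{"level": "ERROR", "message": "payment declined"}',
    '{"level": "ERROR", "message": "cache miss storm"}',
]

# Count error messages only, then rank them by frequency.
events = (json.loads(line) for line in log_lines)
error_counts = Counter(e["message"] for e in events if e["level"] == "ERROR")
top_errors = error_counts.most_common(2)

for message, count in top_errors:
    print(count, message)
```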

It’s well worth it to get on board with JSON logging with Loggly’s free trial, if you haven’t done so already!

Jason Skowronski